
07 Building Webster’s Lab V1 – Creating the vSphere Distributed Switch

Updated 12-Dec-2019

Before creating a vSphere Distributed Switch (vDS), a Datacenter is required.

Verify you are connected and logged in to the vCenter console.

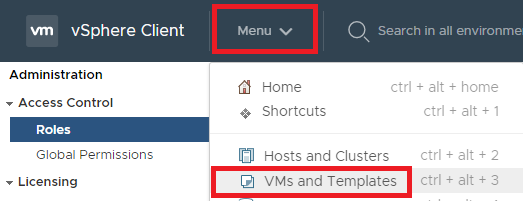

Click Menu and click VMs and Templates, as shown in Figure 1.

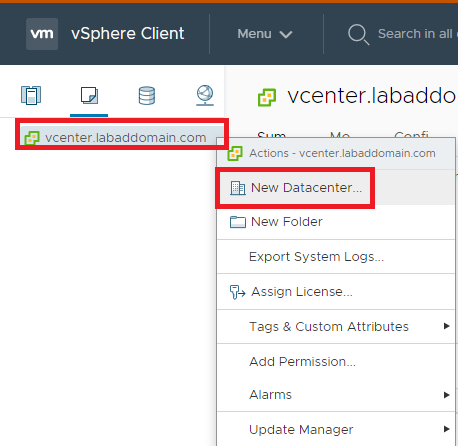

Figure 1 Right-click the vCenter VM and click New Datacenter…, as shown in Figure 2.

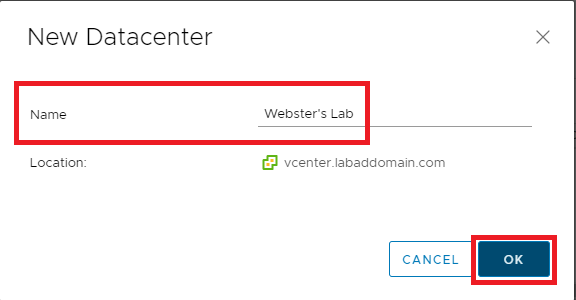

Figure 2 Enter a Name for the Datacenter and click OK, as shown in Figure 3.

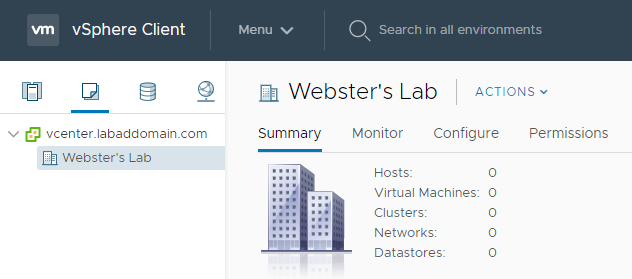

Figure 3 The new Datacenter is shown in the vCenter console, as shown in Figure 4.

Figure 4 Now the hosts are added to vCenter.

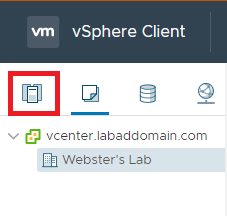

Click the Hosts and Clusters node in vCenter, as shown in Figure 5.

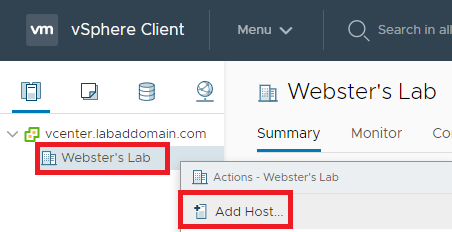

Figure 5

Right-click the Datacenter and click Add Host…, as shown in Figure 6.

Figure 6

Enter the Hostname or IP address of the host to add and click Next, as shown in Figure 7.

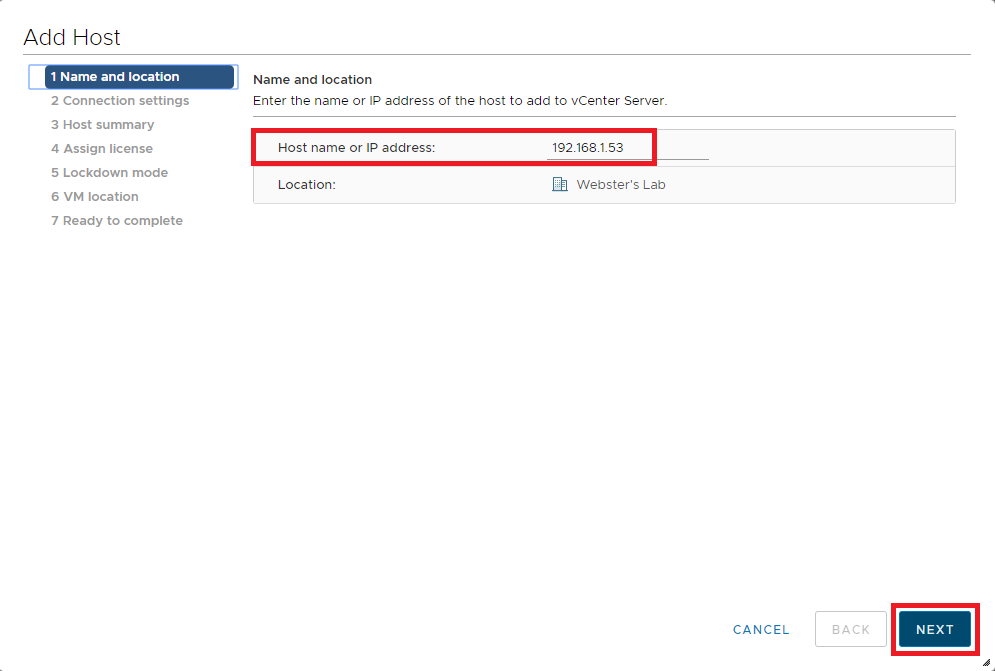

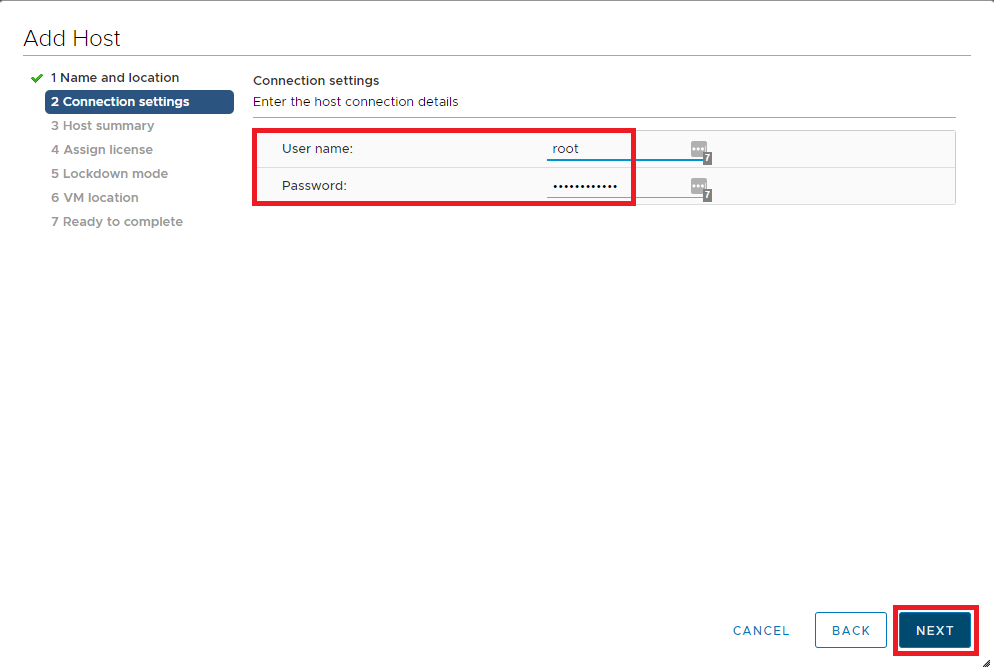

Figure 7 Enter a User name and Password to connect to the ESXi host and click Next, as shown in Figure 8.

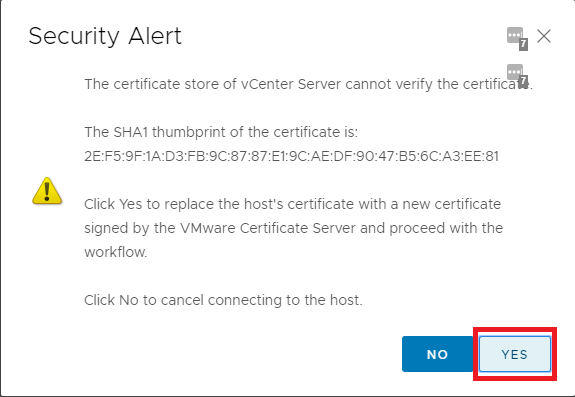

Figure 8 Because of the host’s self-signed certificate, click Yes on the Security Alert popup, as shown in Figure 9.

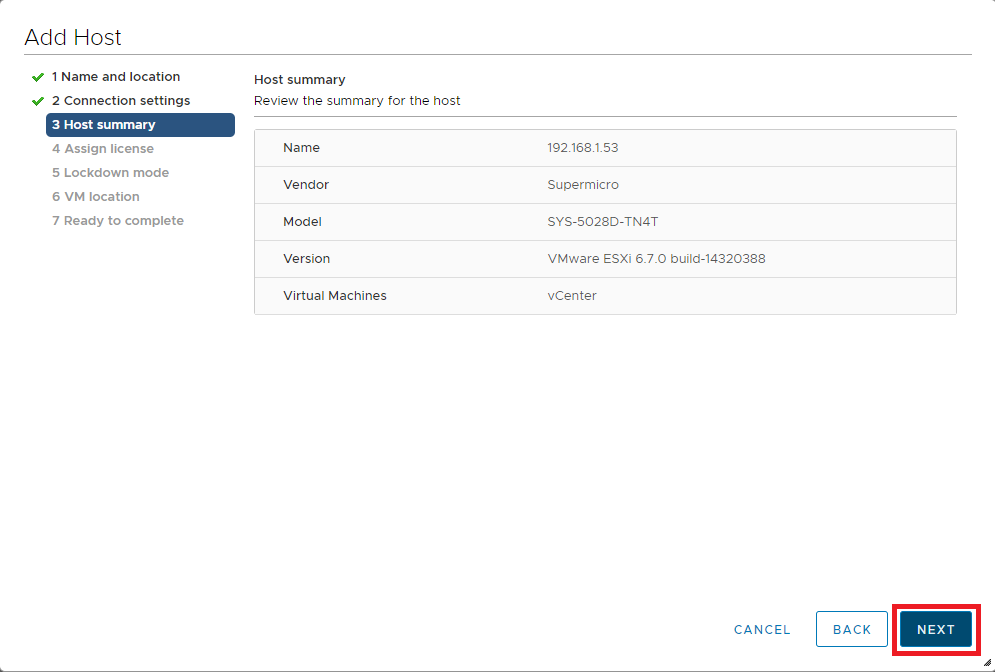

Figure 9 Click Next, as shown in Figure 10.

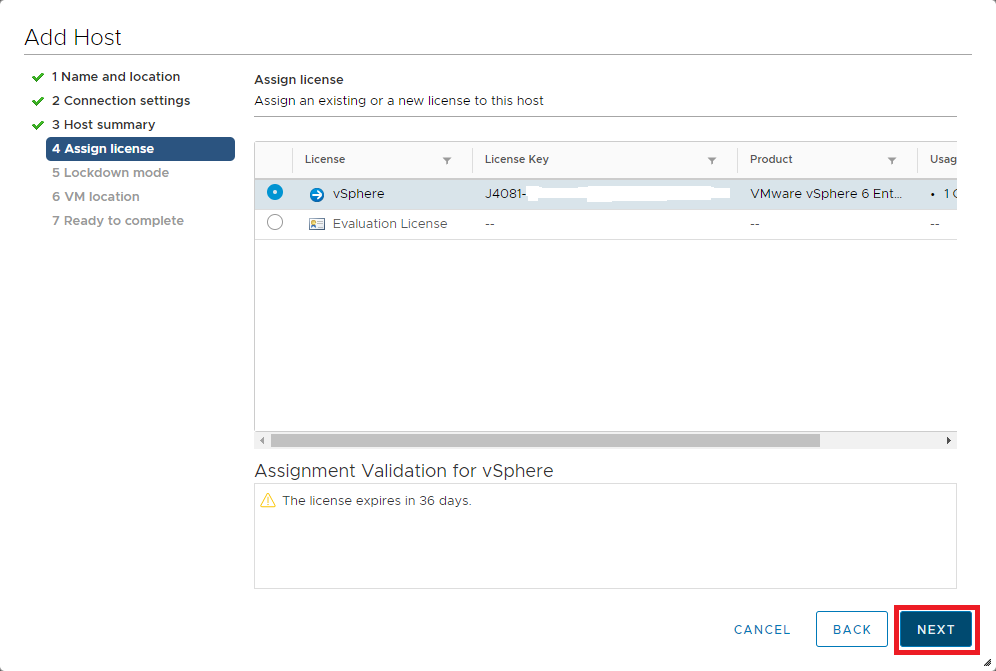

Figure 10 Select the license to assign the host and click Next, as shown in Figure 11.

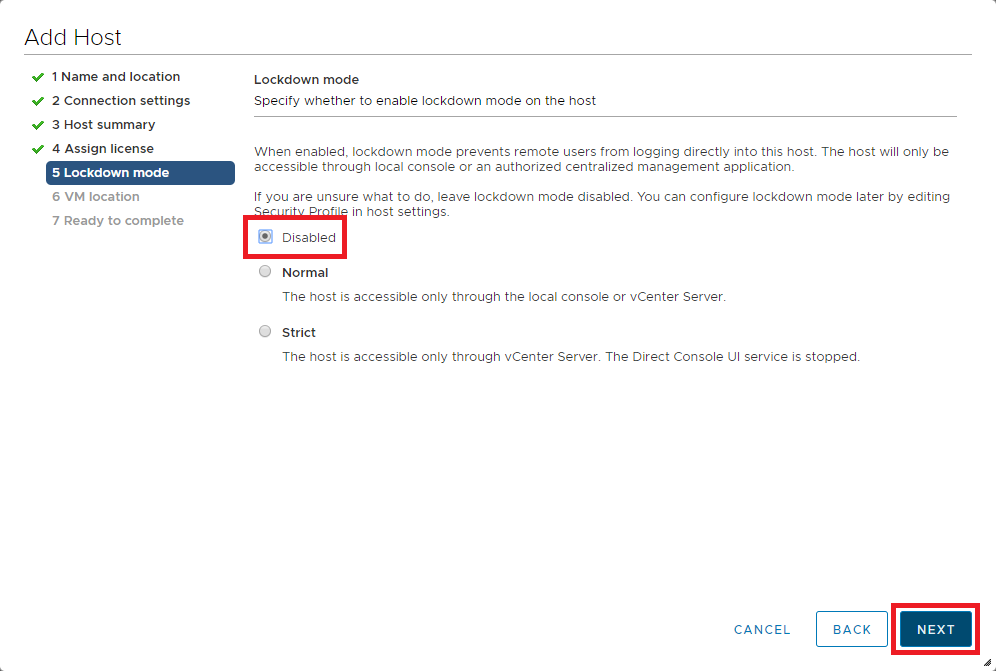

Figure 11 Selected the preferred Lockdown mode and click Next, as shown in Figure 12.

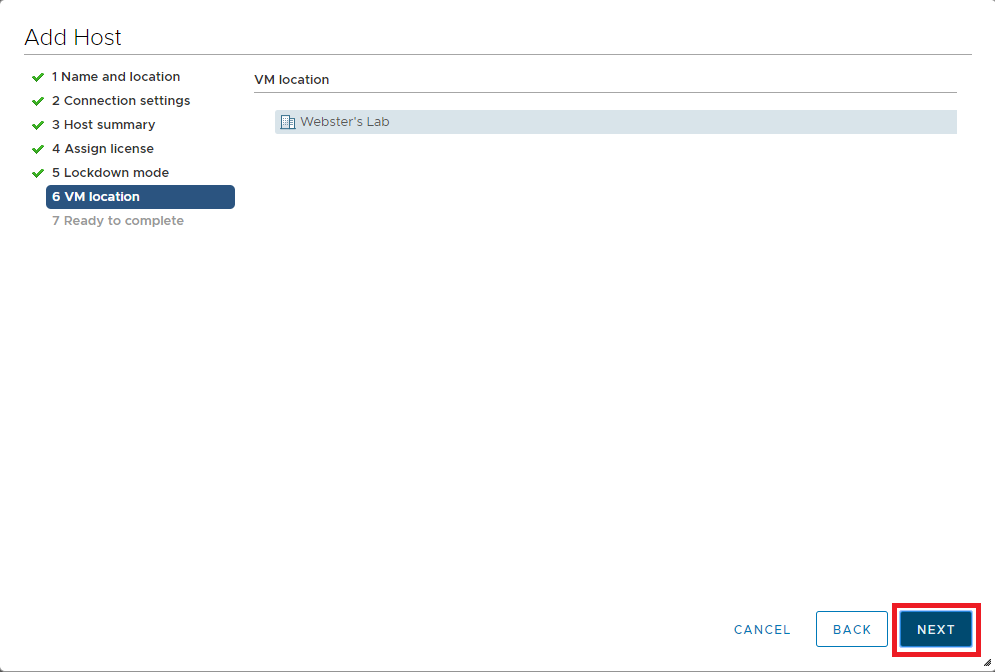

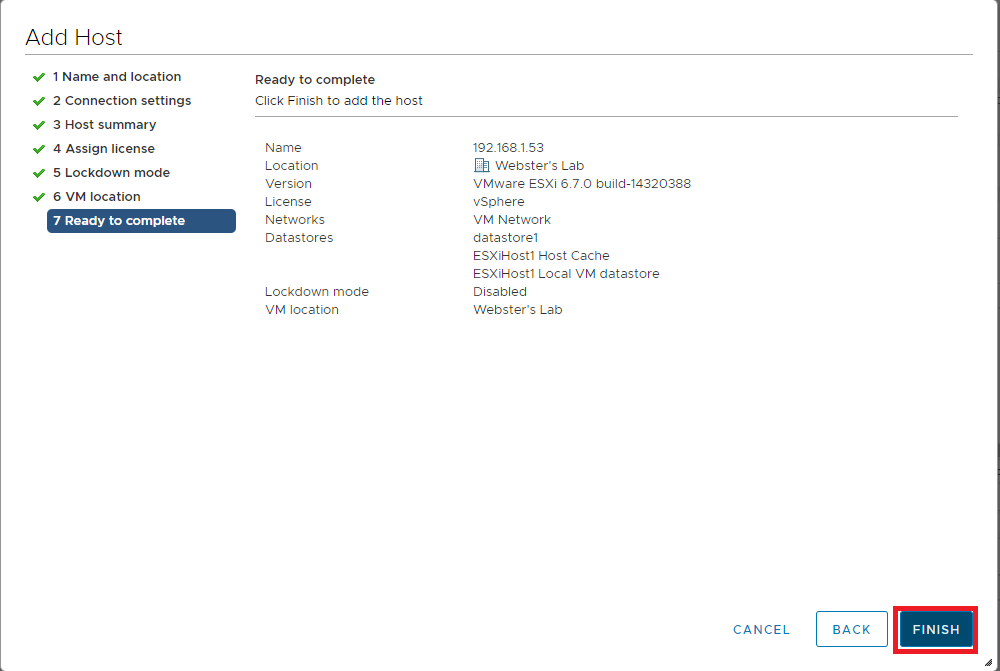

Figure 12 Click Next, as shown in Figure 13.

Figure 13 If all the information is correct, click Finish, as shown in Figure 14. If the information is not correct, click Back, correct the information, and then continue.

Figure 14 Repeat the steps outlined in Figures 6 through 14 to add additional hosts to vCenter.

Next is creating a Cluster.

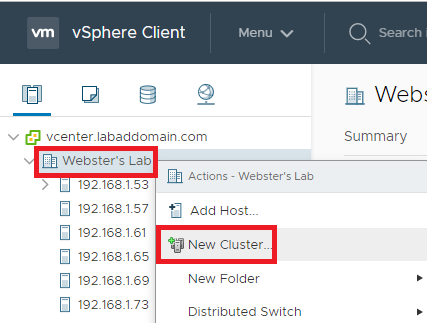

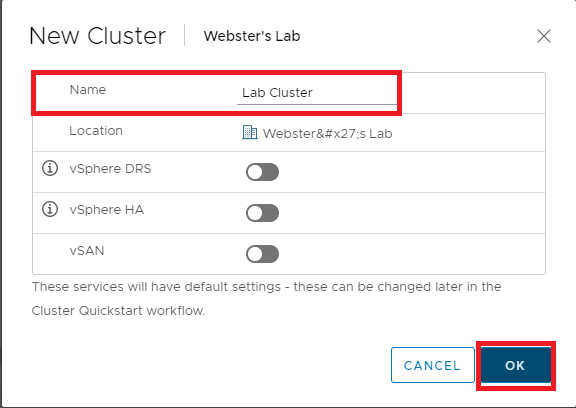

Once you add all hosts to vCenter, right-click the Datacenter and click New Cluster…, as shown in Figure 15.

Figure 15 Enter a Name for the cluster and click OK, as shown in Figure 16.

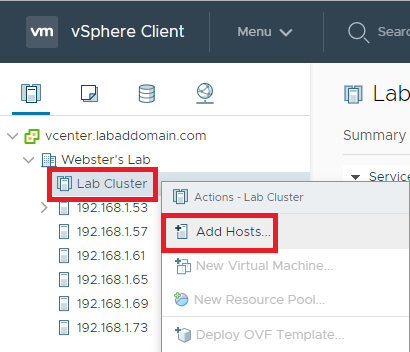

Figure 16 Right-click the new cluster and click Add Hosts…, as shown in Figure 17.

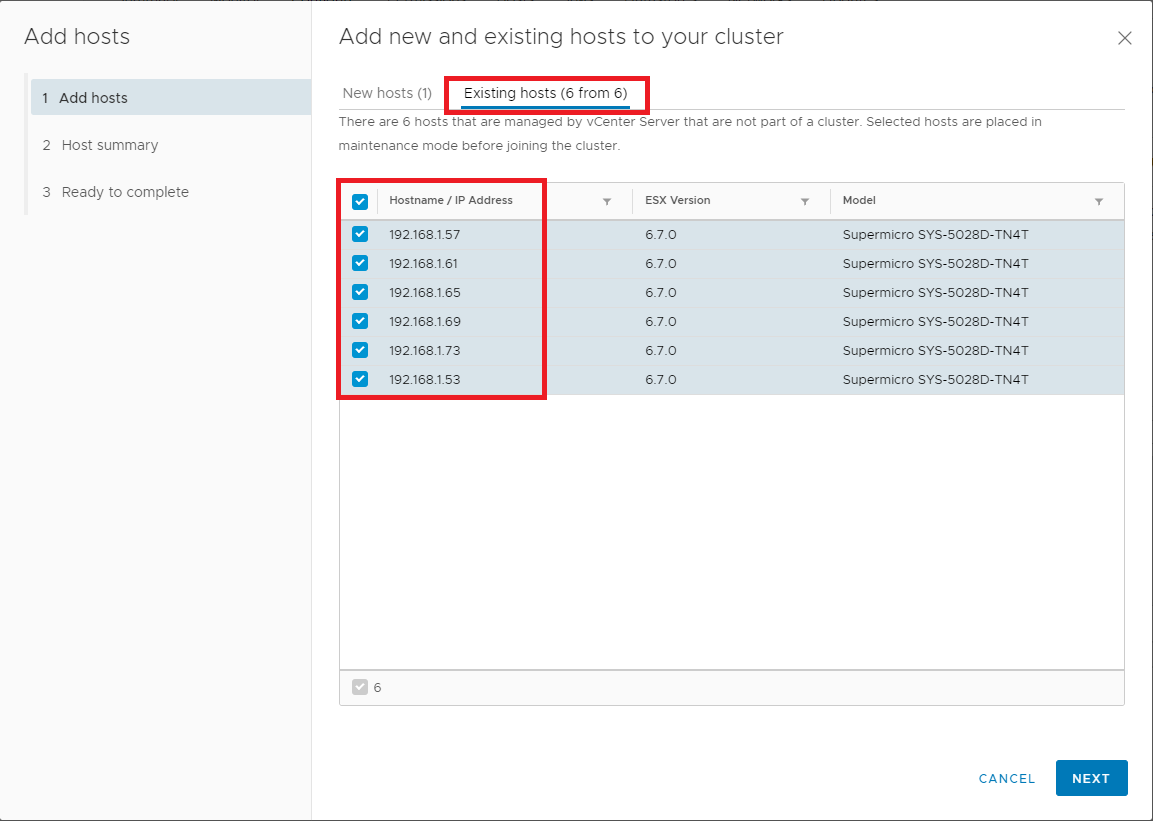

Figure 17 Select Existing hosts, select the hosts to add, and click Next, as shown in Figure 18.

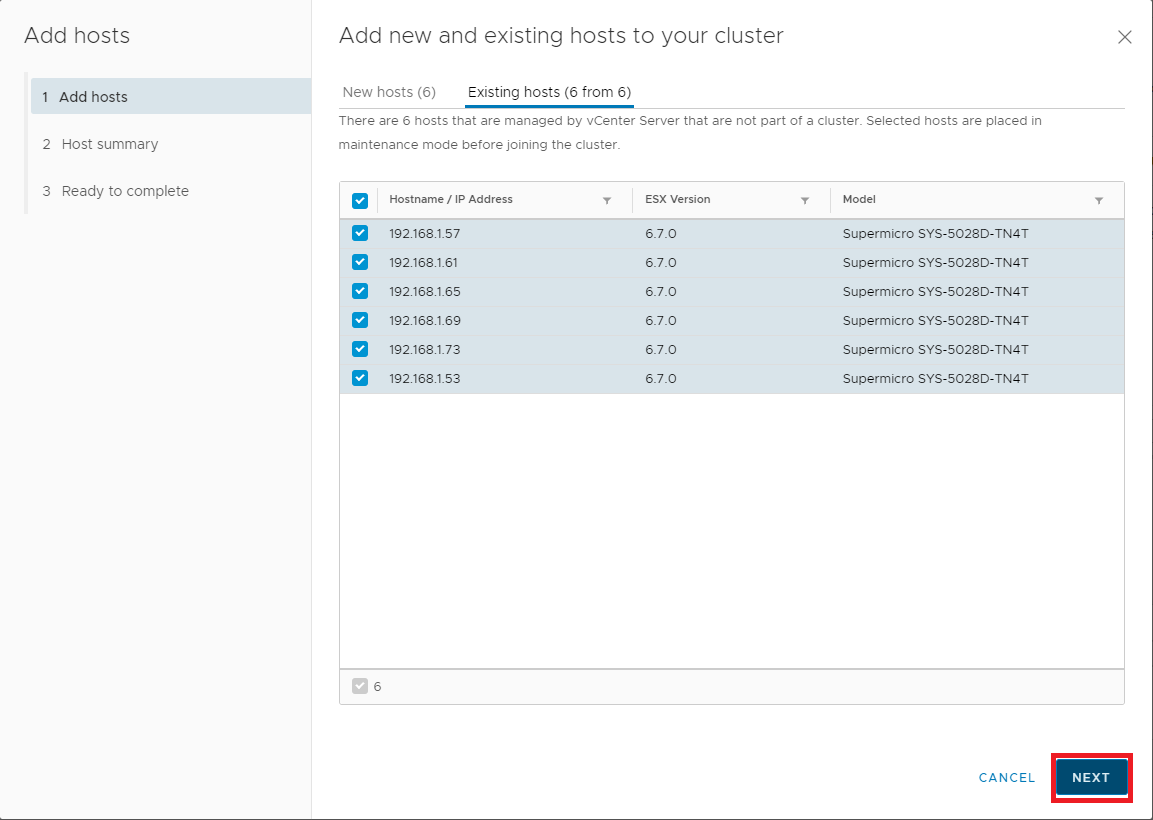

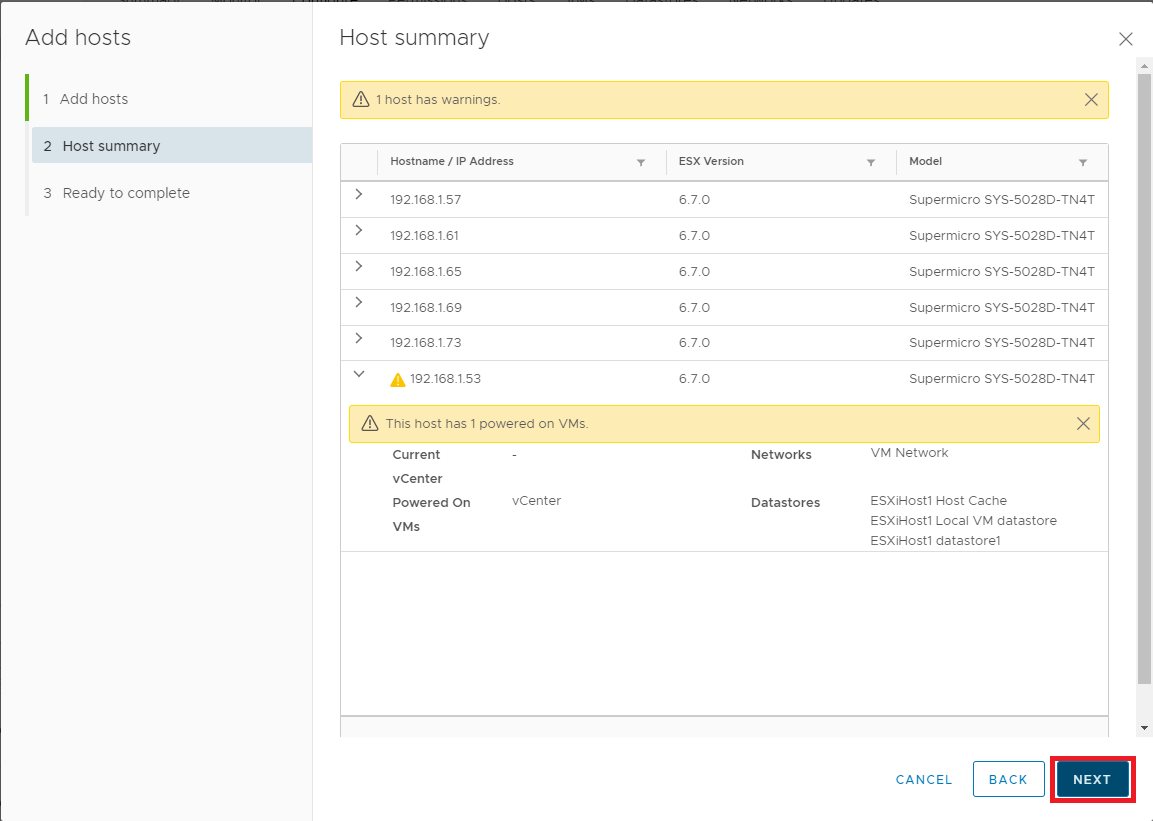

Figure 18 Click Next, as shown in Figure 19.

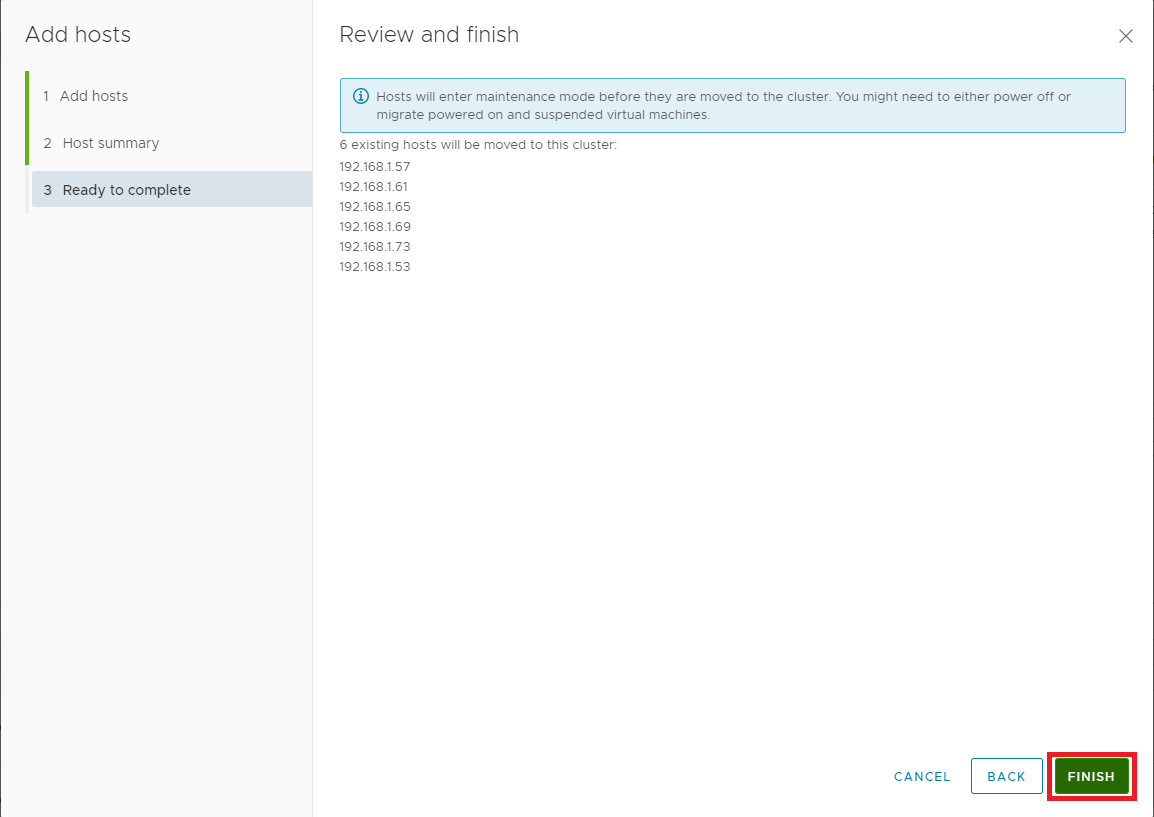

Figure 19 If all the information is correct, click Finish, as shown in Figure 20. If the information is not correct, click Back, correct the information, and then continue.

Figure 20 If all the information is correct, click Finish, as shown in Figure 21. If the information is not correct, click Back, correct the information, and then continue.

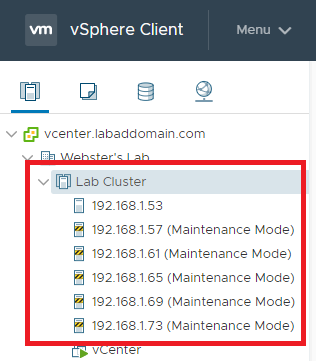

Figure 21 In the vCenter console, you can see the hosts added to the cluster, as shown in Figure 22.

Figure 22 Now on to the fun stuff: Networking.

I tried to come up with my own explanation of port groups, virtual switches, physical NICs, VMkernel NICs, TCP/IP stacks, uplinks, and other items, but found that VMware already had something even I could understand.

I found the following information in the vSphere Networking PDF, Copyright VMware, Inc., available at https://docs.vmware.com/en/VMware-vSphere/6.7/com.vmware.vsphere.networking.doc/GUID-2B11DBB8-CB3C-4AFF-8885-EFEA0FC562F4.html. The following is from the Networking Concepts Overview section (with grammar corrections).

Networking Concepts Overview

A few concepts are essential for a thorough understanding of virtual networking. If you are new to ESXi, it is helpful to review these concepts.

Physical Network: A network of physical machines that are connected so that they can send data to and receive data from each other. VMware ESXi runs on a physical machine.

Virtual Network: A network of virtual machines running on a physical machine that are connected logically to each other so that they can send data to and receive data from each other. Virtual machines can connect to the virtual networks that you create when you add a network.

Opaque Network: An opaque network is a network created and managed by a separate entity outside of vSphere. For example, logical networks that are created and managed by VMware NSX® appear in vCenter Server as opaque networks of the type nsx.LogicalSwitch. You can choose an opaque network as the backing for a VM network adapter. To manage an opaque network, use the management tools associated with the opaque network, such as VMware NSX® Manager™ or the VMware NSX® API™ management tools.

Physical Ethernet Switch: It manages network traffic between machines on the physical network. A switch has multiple ports, each of which can connect to a single machine or another switch on the network. Each port can be configured to behave in certain ways depending on the needs of the machine connected to it. The switch learns which hosts are connected to which of its ports and uses that information to forward traffic to the correct physical machines. Switches are the core of a physical network. Multiple switches can connect to form larger networks.

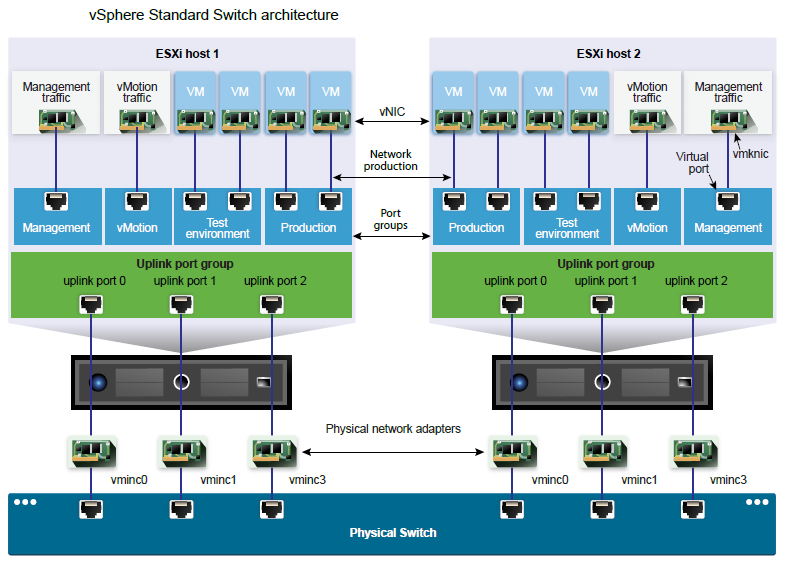

vSphere Standard Switch: It works much like a physical Ethernet switch. It detects which virtual machines are logically connected to each of its virtual ports and uses that information to forward traffic to the correct virtual machines. A vSphere standard switch can connect to physical switches by using physical Ethernet adapters, also referred to as uplink adapters, to join virtual networks with physical networks. This type of connection is similar to connecting physical switches to create a larger network. Even though a vSphere standard switch works much like a physical switch, it does not have some of the advanced functionality of a physical switch.

Standard Port Group: It specifies port configuration options such as bandwidth limitations and VLAN tagging policies for each member port. Network services connect to standard switches through port groups. Port groups define how a connection is made through the switch to the network. Typically, a single standard switch is associated with one or more port groups.

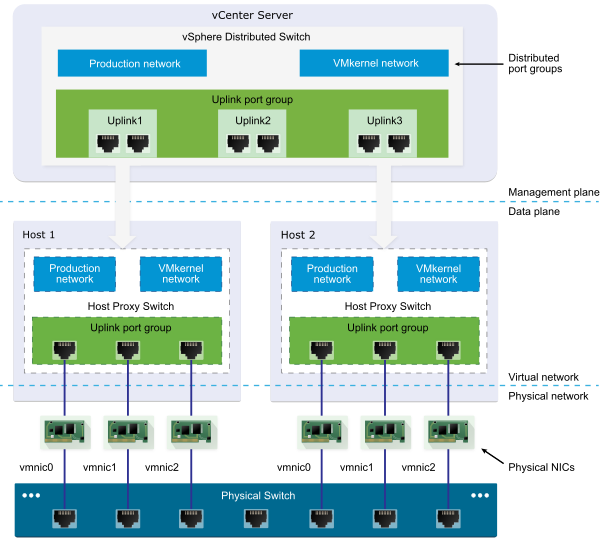

vSphere Distributed Switch: It acts as a single switch across all associated hosts in a data center to provide centralized provisioning, administration, and monitoring of virtual networks. You configure a vSphere distributed switch on the vCenter Server system, and the configuration populates across all hosts that are associated with the switch. This lets virtual machines maintain a consistent network configuration as they migrate across multiple hosts.

Host Proxy Switch: A hidden standard switch that resides on every host that is associated with a vSphere distributed switch. The host proxy switch replicates the networking configuration set on the vSphere distributed switch to the particular host.

Distributed Port: A port on a vSphere distributed switch that connects to a host’s VMkernel or to a virtual machine’s network adapter.

Distributed Port Group: A port group that is associated with a vSphere distributed switch and specifies port configuration options for each member port. Distributed port groups define how a connection is made through the vSphere distributed switch to the network.

NIC Teaming: NIC teaming occurs when multiple uplink adapters are associated with a single switch to form a team. A team can either share the load of traffic between physical and virtual networks among some or all of its members or provide passive failover in the event of a hardware failure or a network outage.

VLAN: A VLAN enables a single physical LAN segment to be further segmented so that groups of ports are isolated from one another as if they were on physically different segments. The standard is 802.1Q.
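To make the 802.1Q reference concrete, here is a minimal Python sketch of the 4-byte VLAN tag that the standard inserts into an Ethernet frame. It is an illustration only and is not part of the VMware text: the function names `build_vlan_tag` and `parse_vlan_tag` are made up for this example, and VLAN ID 100 is an arbitrary value.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q-tagged


def build_vlan_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag (TPID + TCI) for a VLAN ID.

    The VLAN ID field is 12 bits wide (0-4095); IDs 0 and 4095 are reserved
    by the standard, so usable VLANs are 1-4094.
    """
    if not 0 <= vlan_id <= 4095:
        raise ValueError("VLAN ID must fit in 12 bits (0-4095)")
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in 3 bits (0-7)")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit (0 or 1)")
    # TCI layout: 3 bits priority | 1 bit DEI | 12 bits VLAN ID
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> dict:
    """Split a 4-byte 802.1Q tag back into its fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {"priority": tci >> 13, "dei": (tci >> 12) & 1, "vlan_id": tci & 0x0FFF}


if __name__ == "__main__":
    tag = build_vlan_tag(100)  # hypothetical VLAN ID
    print(tag.hex(), parse_vlan_tag(tag))
```

Because the VLAN ID travels inside each frame, one physical uplink can carry traffic for several port groups while keeping them isolated from each other.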

VMkernel TCP/IP Networking Layer: The VMkernel networking layer provides connectivity to hosts and handles the standard infrastructure traffic of vSphere vMotion, IP storage, Fault Tolerance, and Virtual SAN.

IP Storage: Any form of storage that uses TCP/IP network communication as its foundation. iSCSI can be used as a virtual machine datastore, and NFS can be used as a virtual machine datastore and for direct mounting of .ISO files, which are presented as CD-ROMs to virtual machines.

TCP Segmentation Offload: TCP Segmentation Offload (TSO) allows a TCP/IP stack to emit large frames (up to 64KB) even though the maximum transmission unit (MTU) of the interface is smaller. The network adapter then separates the large frame into MTU-sized frames and prepends an adjusted copy of the initial TCP/IP headers.
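As a rough illustration of the TSO description, the following Python sketch estimates how many MTU-sized frames a network adapter would produce from one large send. It is not part of the VMware text. It assumes IPv4 and TCP headers of 20 bytes each with no options, treats the 64KB figure as pure payload, and uses a made-up function name, `tso_segment_count`.

```python
def tso_segment_count(payload_bytes: int, mtu: int = 1500,
                      ip_header: int = 20, tcp_header: int = 20) -> int:
    """Estimate how many MTU-sized frames one large TCP send is split into.

    Assumes fixed 20-byte IPv4 and TCP headers (no options), so the payload
    carried per frame is mtu - 40 bytes.
    """
    if payload_bytes < 0:
        raise ValueError("payload_bytes must not be negative")
    per_frame = mtu - ip_header - tcp_header
    if per_frame <= 0:
        raise ValueError("MTU is too small to carry any payload")
    # Ceiling division without floating point
    return -(-payload_bytes // per_frame)


if __name__ == "__main__":
    large_send = 64 * 1024  # the 64KB upper bound mentioned above
    print(tso_segment_count(large_send, mtu=1500))  # 45 frames
    print(tso_segment_count(large_send, mtu=9000))  # 8 frames
```

The comparison also hints at why a larger MTU is attractive for storage traffic: the same 64KB send needs far fewer frames.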

VMware Standard Switch Architecture

vSphere Distributed Switch Architecture

My TinkerTry server has two 1Gb NICs and two 10Gb NICs. I want to use all four NICs. Two vDSes are required: one for the two 1Gb NICs and one for the two 10Gb NICs.
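The one-vDS-per-NIC-speed layout can be sketched in a few lines of Python. This is a planning aid under stated assumptions, not something vCenter runs: the adapter names `vmnic0` through `vmnic3` and their speed assignments are hypothetical, and `plan_distributed_switches` is a made-up helper name.

```python
from collections import defaultdict


def plan_distributed_switches(nic_speeds_mbps: dict) -> dict:
    """Group physical NICs by link speed, one distributed switch per speed."""
    by_speed = defaultdict(list)
    for nic, speed in sorted(nic_speeds_mbps.items()):
        by_speed[speed].append(nic)

    plan = {}
    for speed, nics in sorted(by_speed.items()):
        # Name each switch after its port speed, e.g. "vDS 10Gb"
        label = f"{speed // 1000}Gb" if speed % 1000 == 0 else f"{speed}Mb"
        plan[f"vDS {label}"] = nics
    return plan


if __name__ == "__main__":
    # Hypothetical adapter names and speeds for a host with four NICs
    nics = {"vmnic0": 1000, "vmnic1": 1000, "vmnic2": 10000, "vmnic3": 10000}
    for switch, uplinks in plan_distributed_switches(nics).items():
        print(switch, uplinks)
```

Writing the mapping down before opening the wizard makes it easier to match the physical adapters to the right switch.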

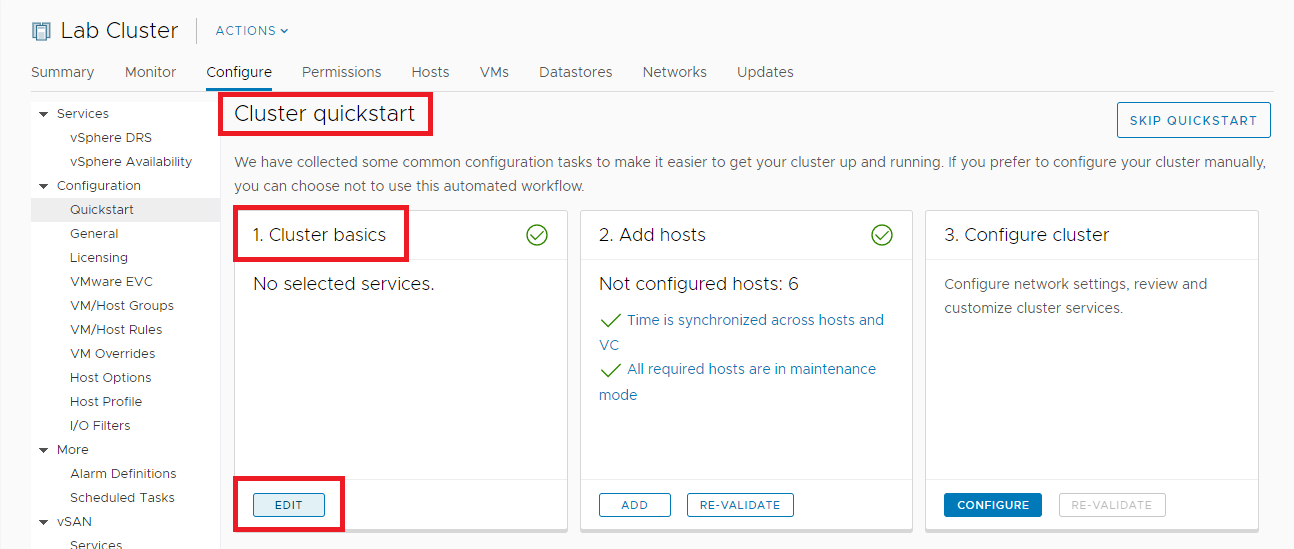

In vCenter, in the right pane, in Cluster quickstart, click the Edit button in the Cluster basics box as shown in Figure 23.

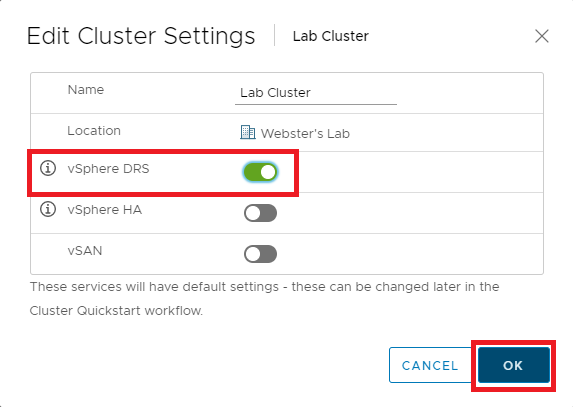

Figure 23 Enable vSphere DRS (to allow the automatic creation of vMotion in the next step) and click OK as shown in Figure 24.

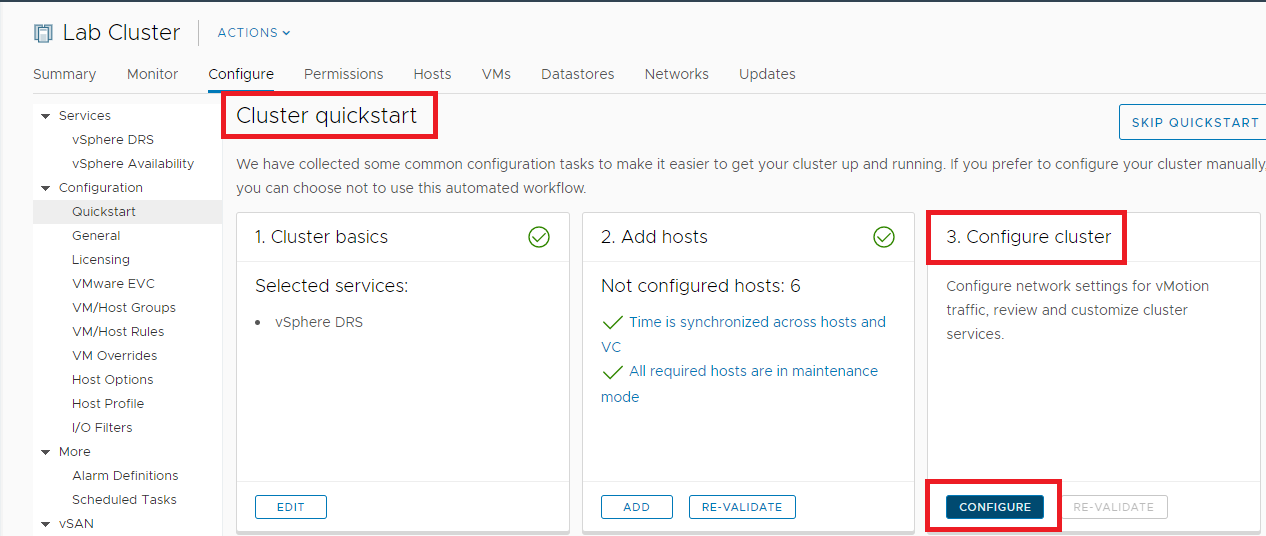

Figure 24 Still in Cluster quickstart, click the Configure button in the Configure cluster box as shown in Figure 25.

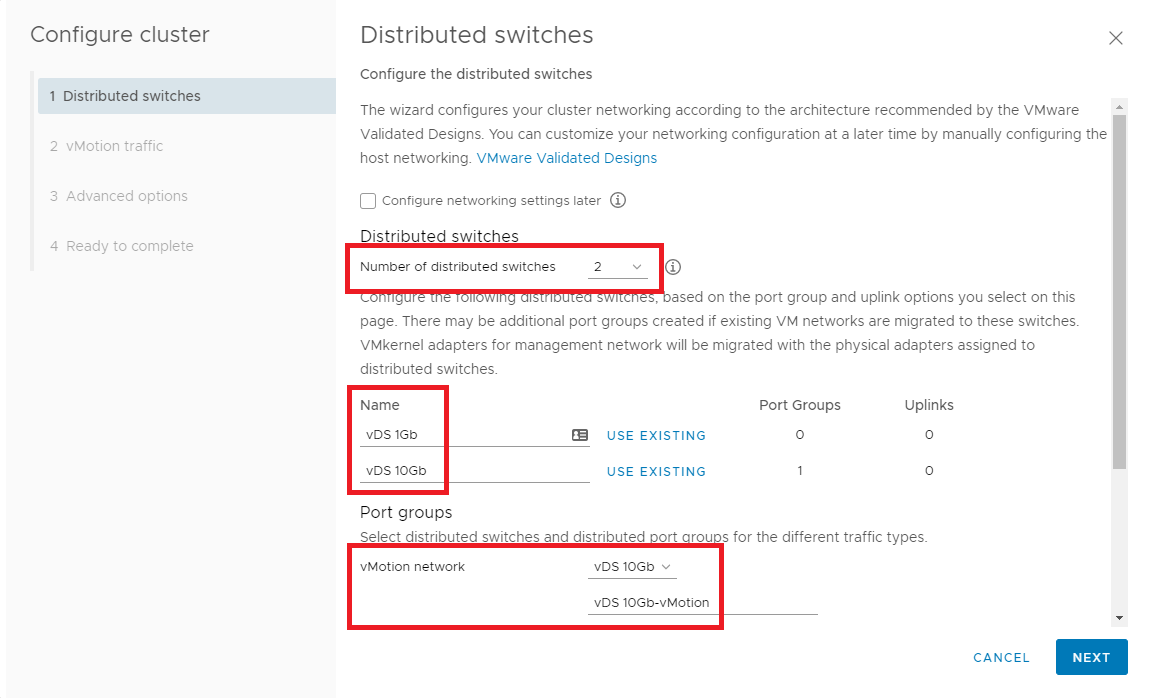

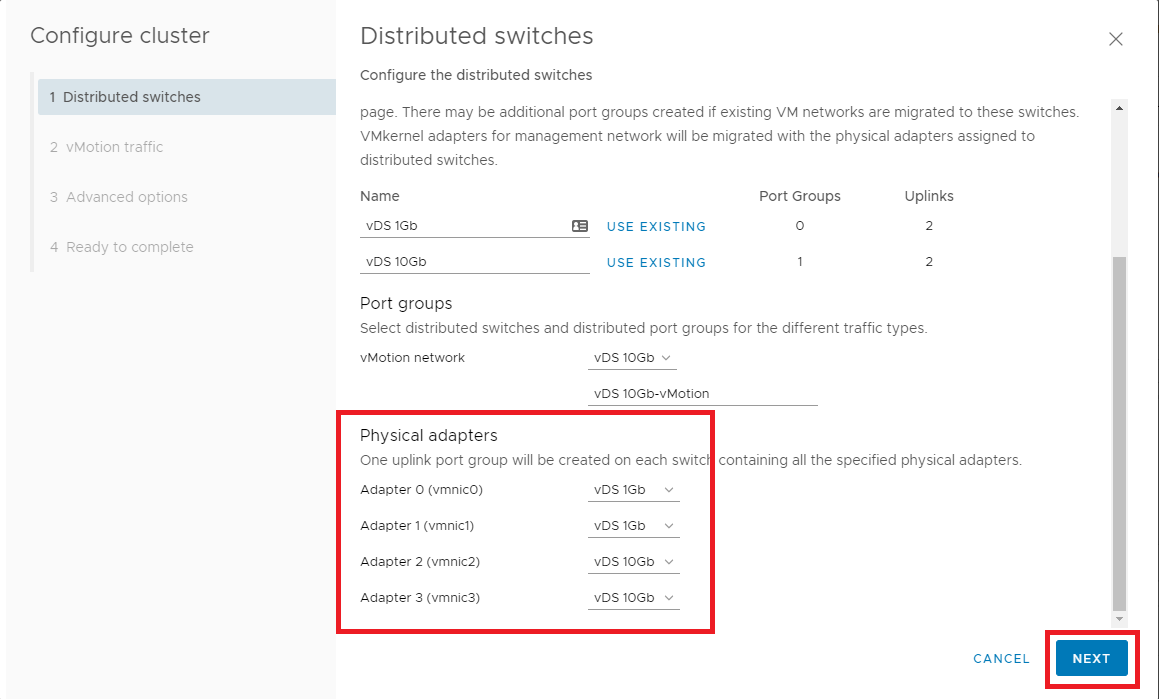

Figure 25

I want to create a vDS for each pair of NICs and name each one so that it is easy to associate with the NIC port speed.

Using the scrollbar, scroll down so you can see the Distributed switches and Physical adapters on one screen.

As shown in Figures 26 and 27:

- From the Number of distributed switches dropdown, select 2

- Give each distributed switch a Name

- Select which port group to use for vMotion

- Select the Physical adapters to match to each distributed switch

- When complete, click

Figure 26

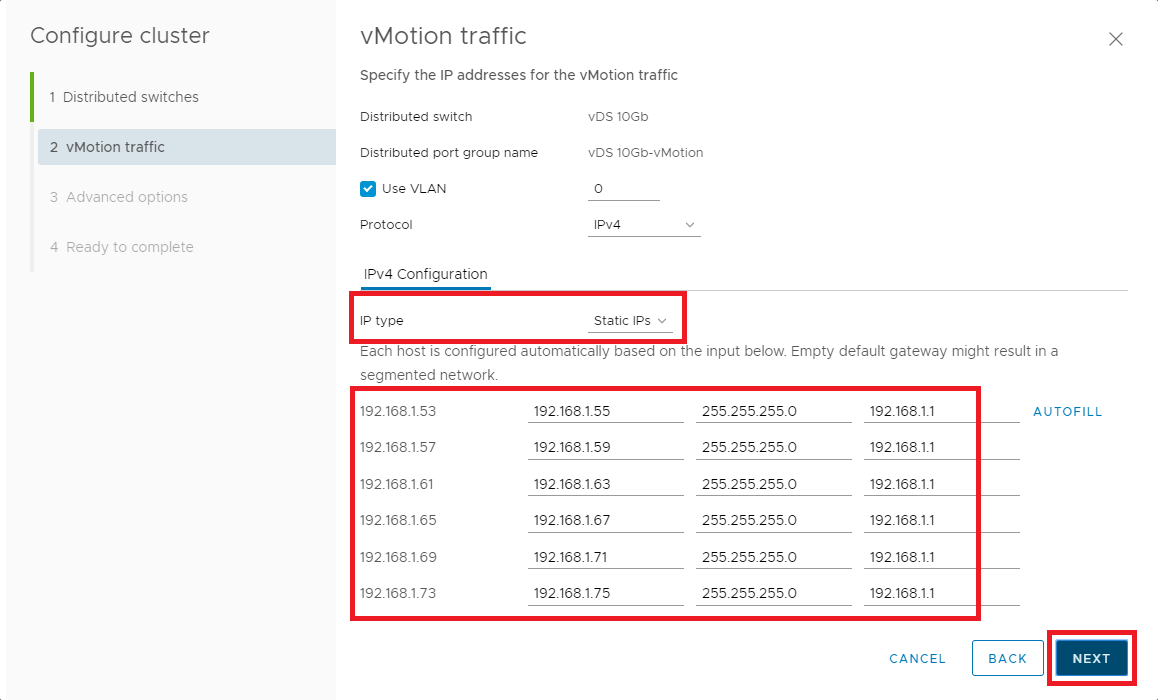

Figure 27

Change the IP Type to Static IPs, enter the IP information for vMotion, and click Next, as shown in Figure 28.
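Before typing static addresses into the wizard, it can help to lay out one address per host. The following Python sketch does that with the standard `ipaddress` module. Every value in it is an assumption for illustration: the host names, the `192.168.10.0/24` subnet, the starting offset, and the helper name `plan_static_ips` do not come from the lab.

```python
import ipaddress


def plan_static_ips(hosts, network: str, first_host_offset: int = 11) -> dict:
    """Assign consecutive static IPs from one subnet, one per host.

    Returns {host: (ip_address, subnet_mask)} and refuses addresses that
    fall outside the subnet or land on its network/broadcast address.
    """
    if first_host_offset < 1:
        raise ValueError("offset 0 is the network address")
    net = ipaddress.ip_network(network)
    plan = {}
    for index, host in enumerate(hosts):
        address = net.network_address + first_host_offset + index
        if address not in net or address == net.broadcast_address:
            raise ValueError(f"no usable address left in {net} for {host}")
        plan[host] = (str(address), str(net.netmask))
    return plan


if __name__ == "__main__":
    # Hypothetical host names and vMotion subnet
    for host, (ip, mask) in plan_static_ips(["esxi01", "esxi02"], "192.168.10.0/24").items():
        print(host, ip, mask)
```

The same helper works for the NFS VMkernel adapters later on; only the subnet changes.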

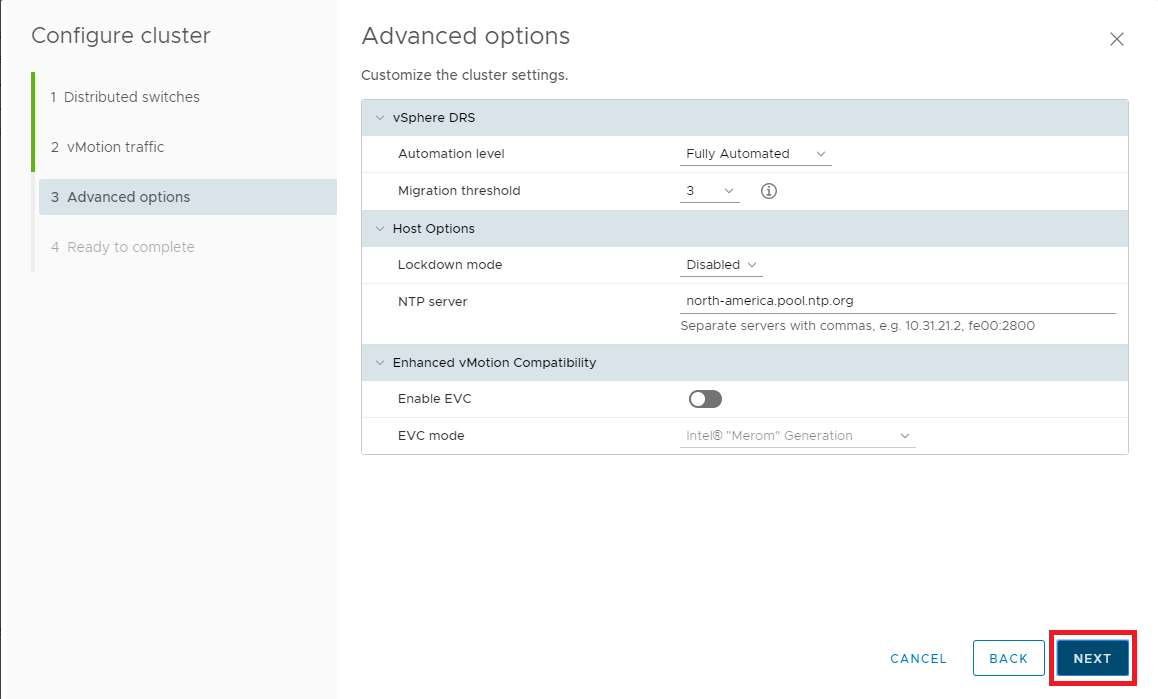

Figure 28 Select the appropriate options and click Next, as shown in Figure 29.

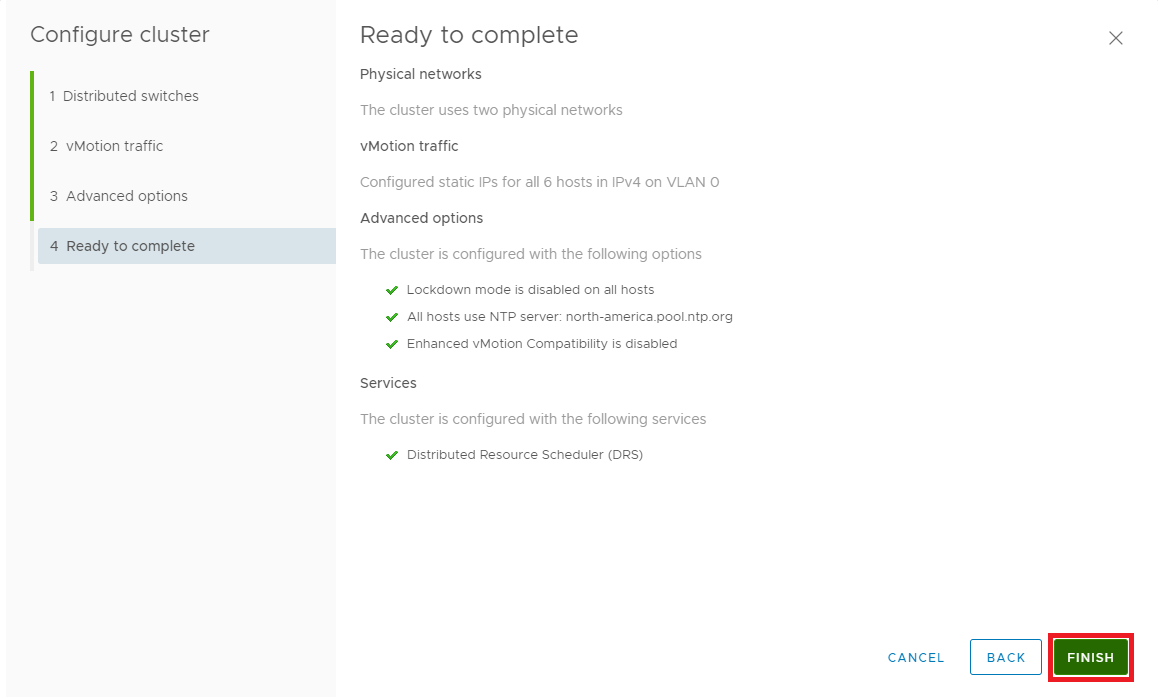

Figure 29 If all the information is correct, click Finish, as shown in Figure 30. If the information is not correct, click Back, correct the settings, and then continue.

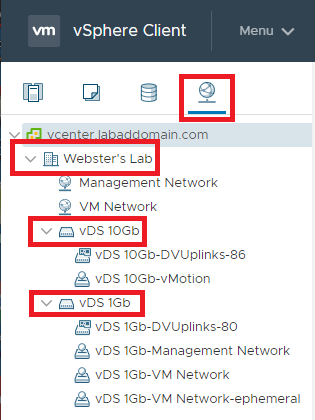

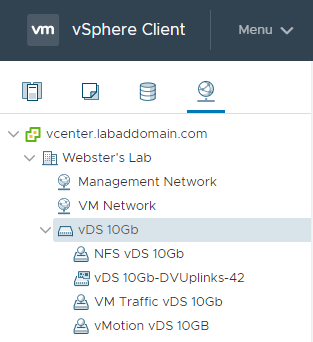

Figure 30

Click the Networking icon, then expand the cluster and each vDS, as shown in Figure 31.

Figure 31

I plan to use the vDS 10Gb switch for VM, vMotion, and Storage traffic. To accomplish that, two additional Port Groups are required. The vMotion Port Group was created earlier.

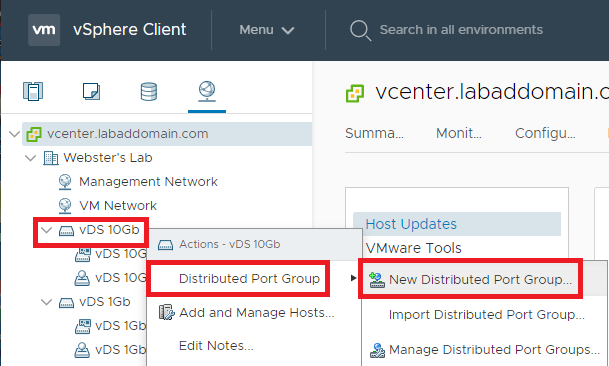

Right-click the vDS 10Gb switch, select Distributed Port Group, select New Distributed Port Group…, as shown in Figure 32.

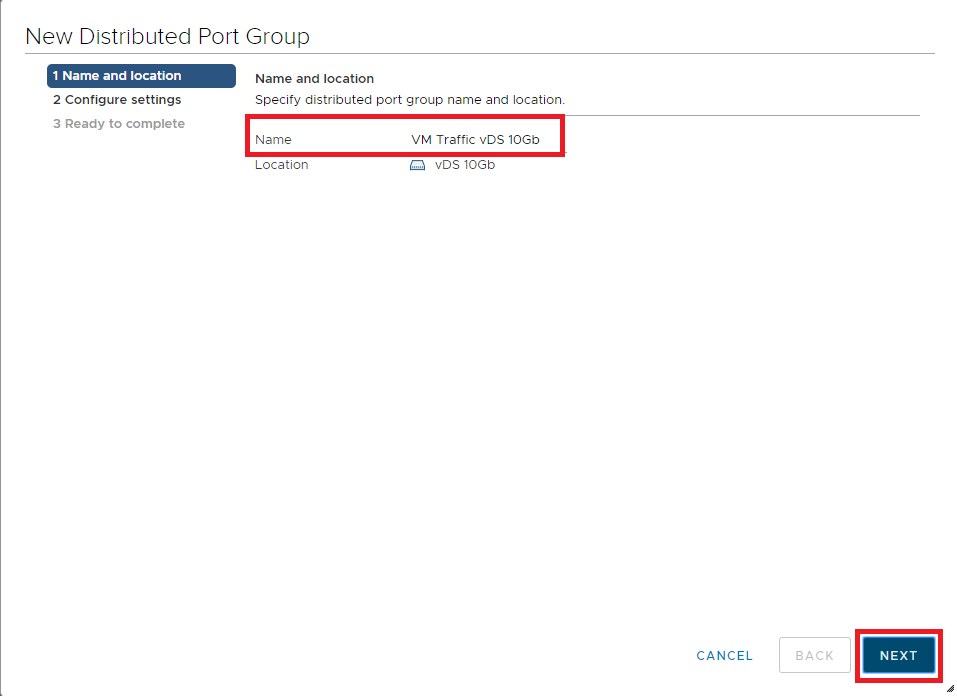

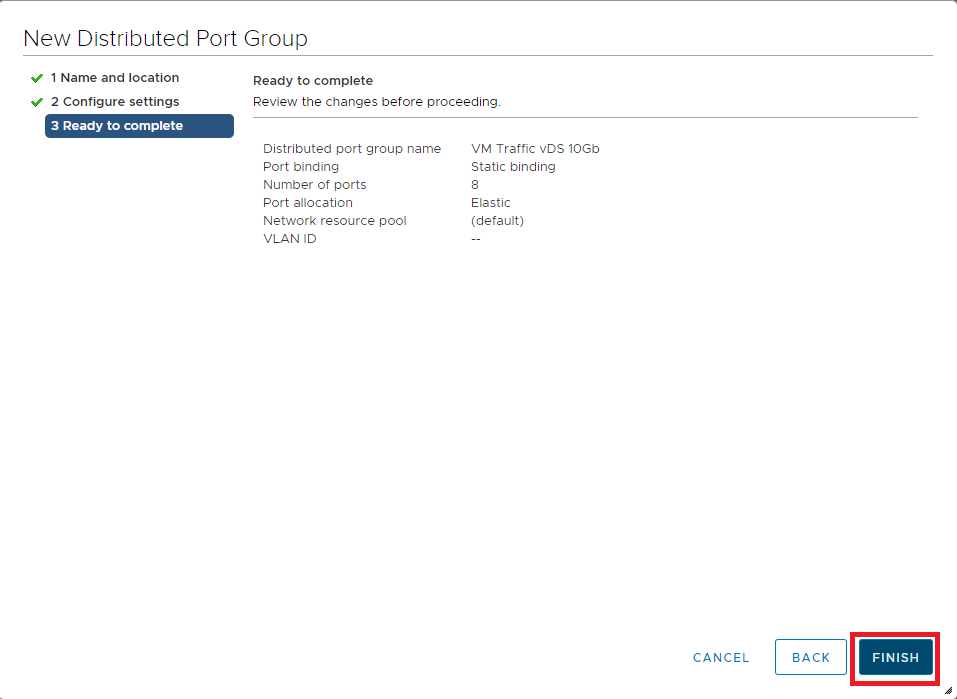

Figure 32 The Name for this port group is VM Traffic vDS 10Gb, click Next, as shown in Figure 33.

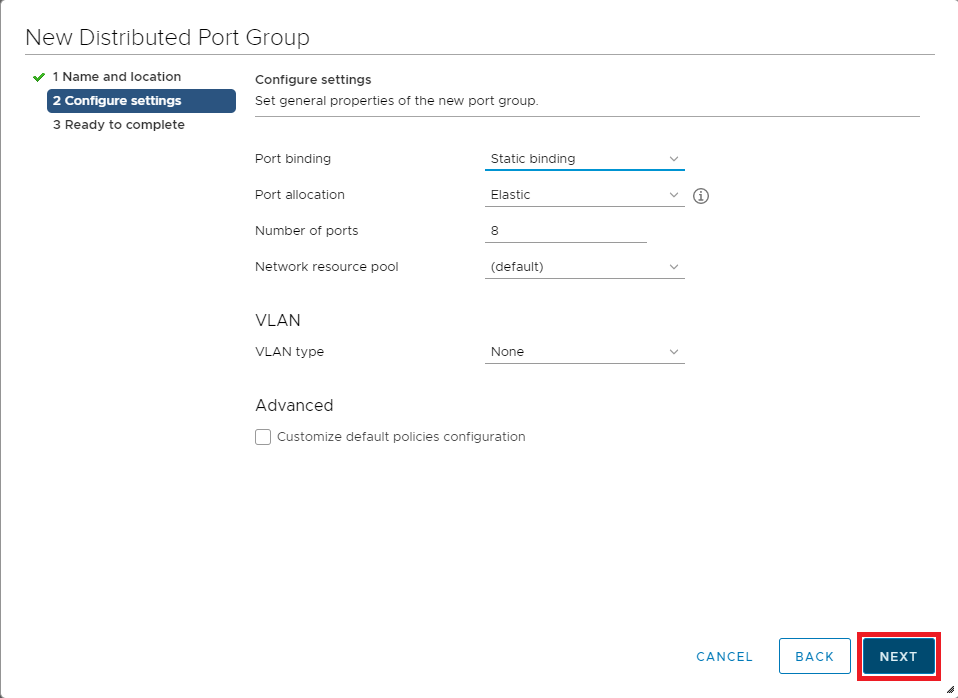

Figure 33 For my lab, the default general properties are good. Click Next, as shown in Figure 34.

Figure 34 If all the information is correct, click Finish, as shown in Figure 35. If the information is not correct, click Back, correct the information, and then continue.

Figure 35 Repeat these steps to create an additional port group with the Name: NFS vDS 10Gb.

When complete, the Distributed Port Groups should look like Figure 36.

Figure 33 vMotion and NFS storage require VMkernel NICs. The vMotion VMkernel NIC was created earlier as part of the vDS creation wizard.

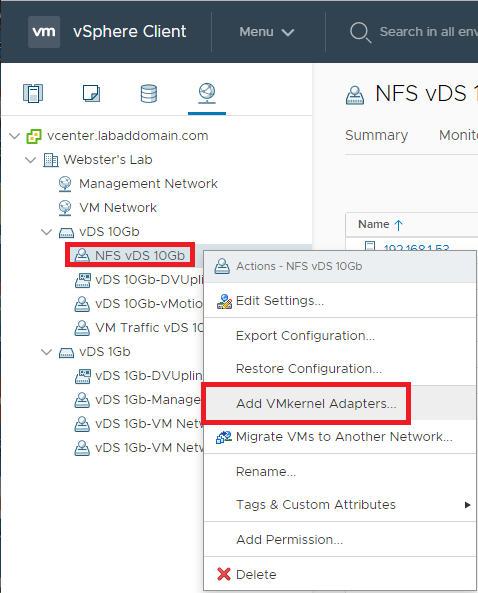

Right-click the NFS vDS 10Gb distributed port group and click Add VMkernel Adapters…, as shown in Figure 37.

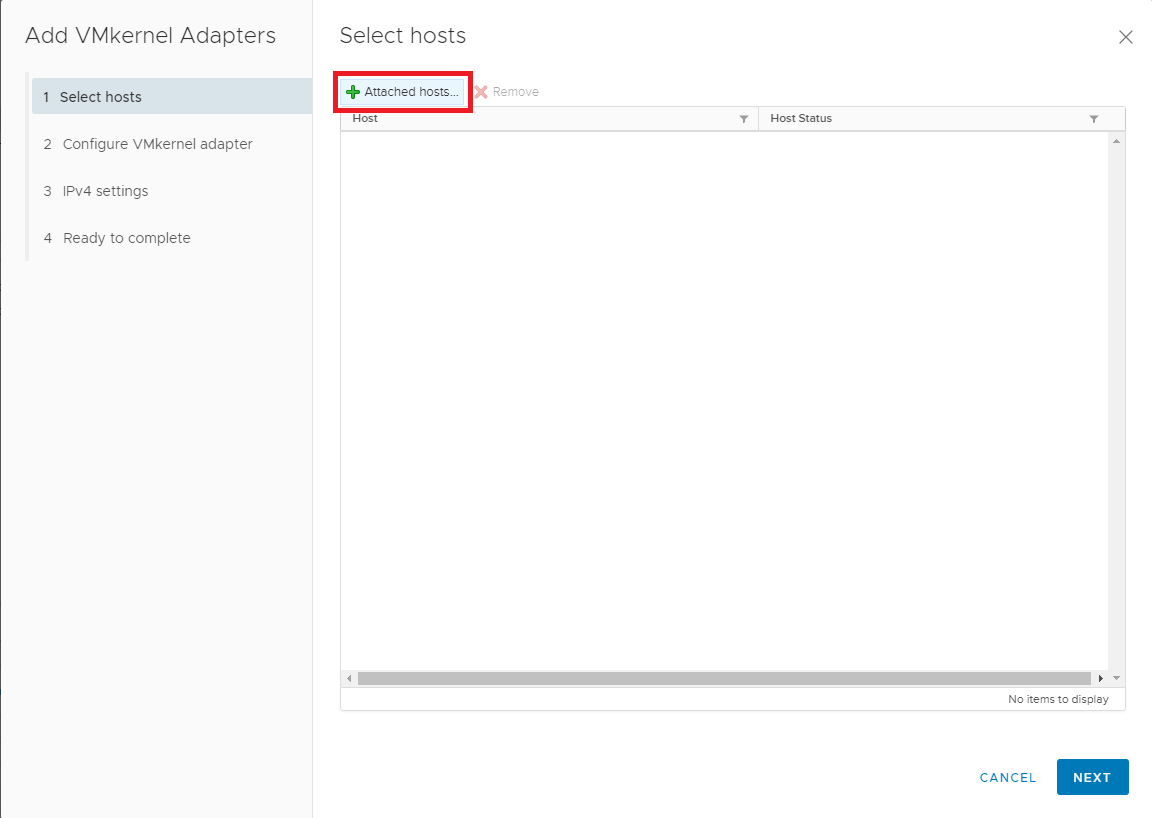

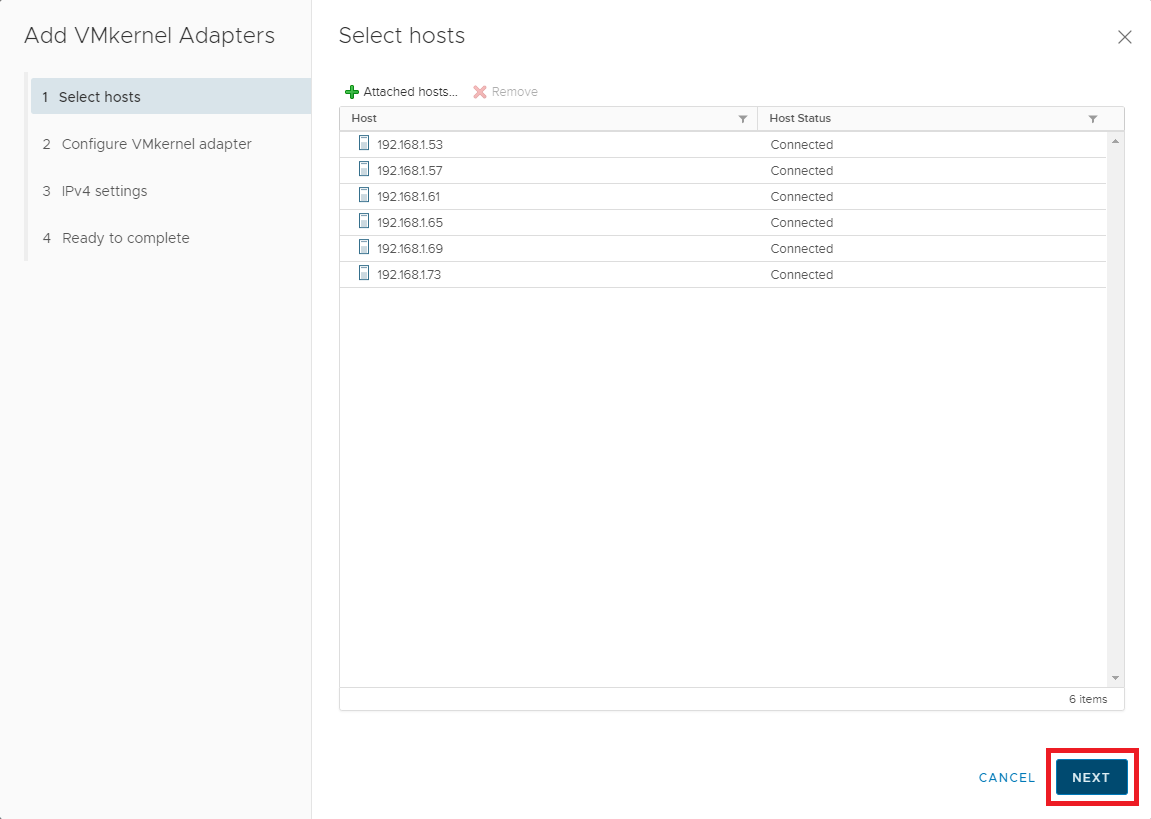

Figure 37 Click Attached hosts…, as shown in Figure 38.

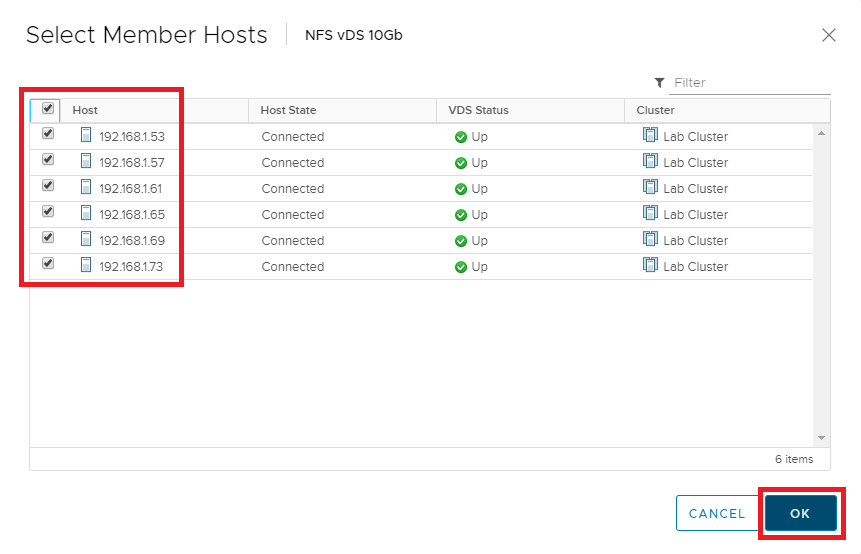

Figure 38 Select all hosts and click OK, as shown in Figure 39.

Figure 39 Click Next, as shown in Figure 40.

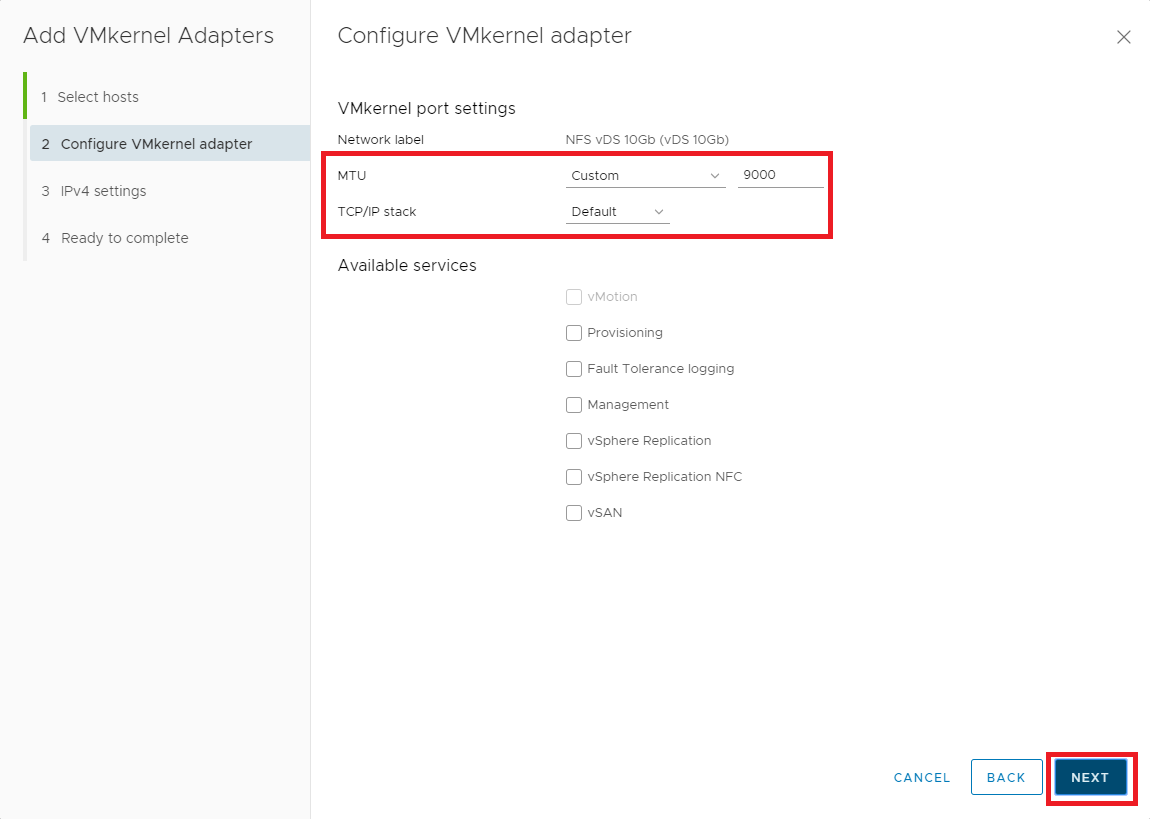

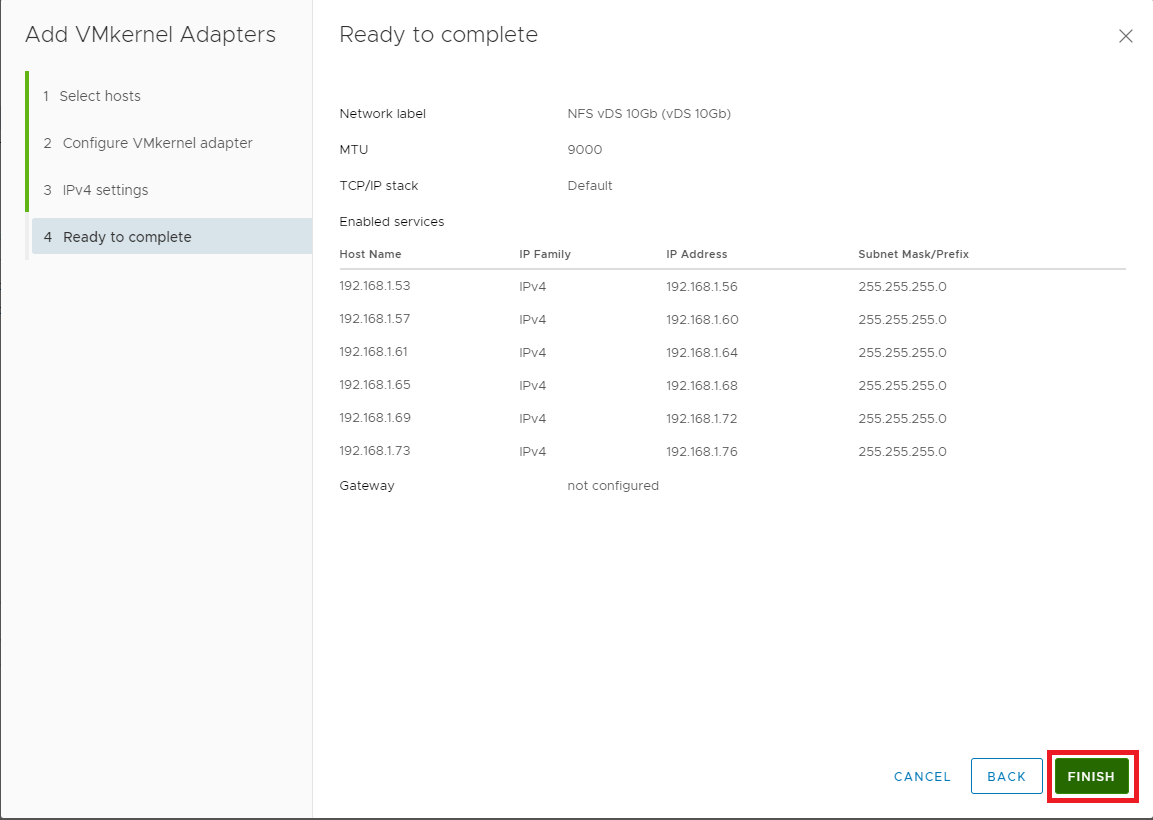

Figure 40 Enter the following information:

- MTU: enter the MTU for your 10Gb switch (typically 9000)

- TCP/IP stack: select Default from the dropdown list

- Available services: leave all unselected

Click Next, as shown in Figure 41.
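An MTU of 9000 only helps if every device in the path accepts jumbo frames. A common check from the ESXi shell is `vmkping` with the don't-fragment flag and a payload sized to fill the MTU exactly. The Python sketch below only computes that payload size and builds the command string; it does not run anything on a host. The interface name `vmk2` and the target address are hypothetical, and the helper names are made up for this example.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    payload = mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES
    if payload < 0:
        raise ValueError("MTU is smaller than the IPv4 + ICMP headers")
    return payload


def vmkping_command(vmk: str, target_ip: str, mtu: int) -> str:
    """Build a vmkping command: -I picks the VMkernel NIC, -d sets
    don't-fragment, and -s sets the payload size."""
    return f"vmkping -I {vmk} -d -s {max_icmp_payload(mtu)} {target_ip}"


if __name__ == "__main__":
    # Hypothetical VMkernel adapter name and NFS server address
    print(vmkping_command("vmk2", "192.168.20.50", 9000))
```

If the ping at the full payload size fails while a small ping succeeds, something between the host and the target is probably not configured for the larger MTU.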

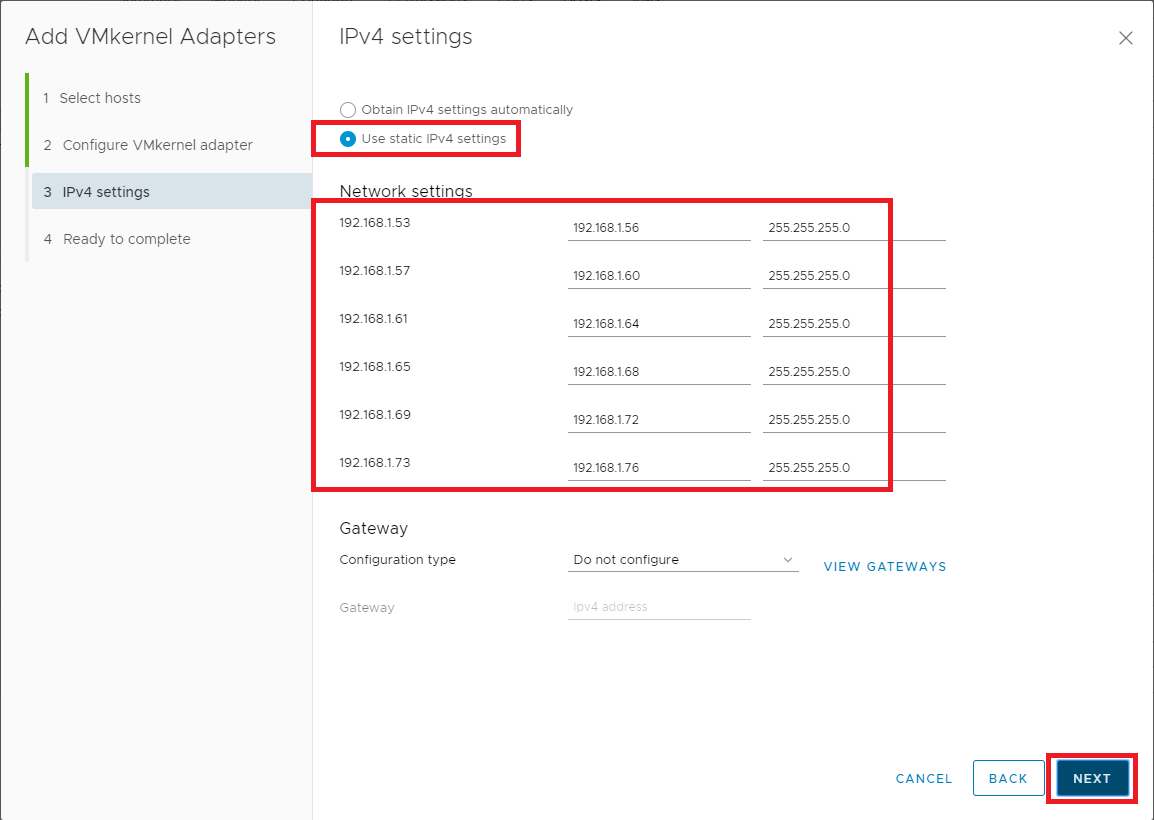

Figure 41

Select Use static IPv4 settings. For each host, enter the IP address and subnet for the NFS VMkernel and click Next as shown in Figure 42.

Figure 42

If all the information is correct, click Finish, as shown in Figure 43. If the information is not correct, click Back, correct the information, and then continue.

Figure 43

Now that our networking is complete, let’s move on to configuring NFS Storage.

Click Storage, as shown in Figure 44.

Figure 44

First up is an NFS datastore to hold VMs.

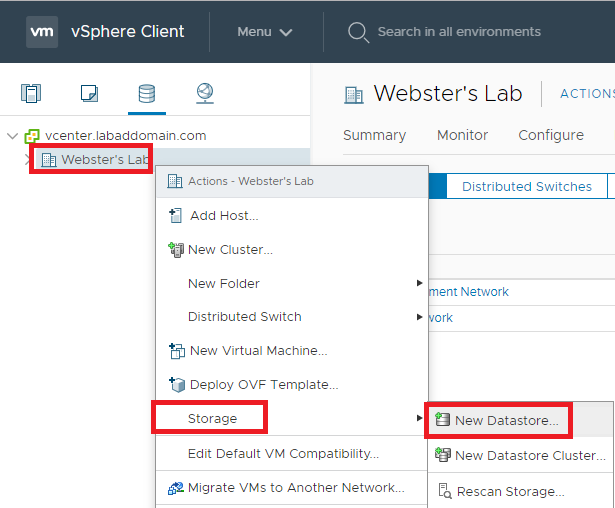

Right-click the cluster, click Storage, click New Datastore…, as shown in Figure 45.

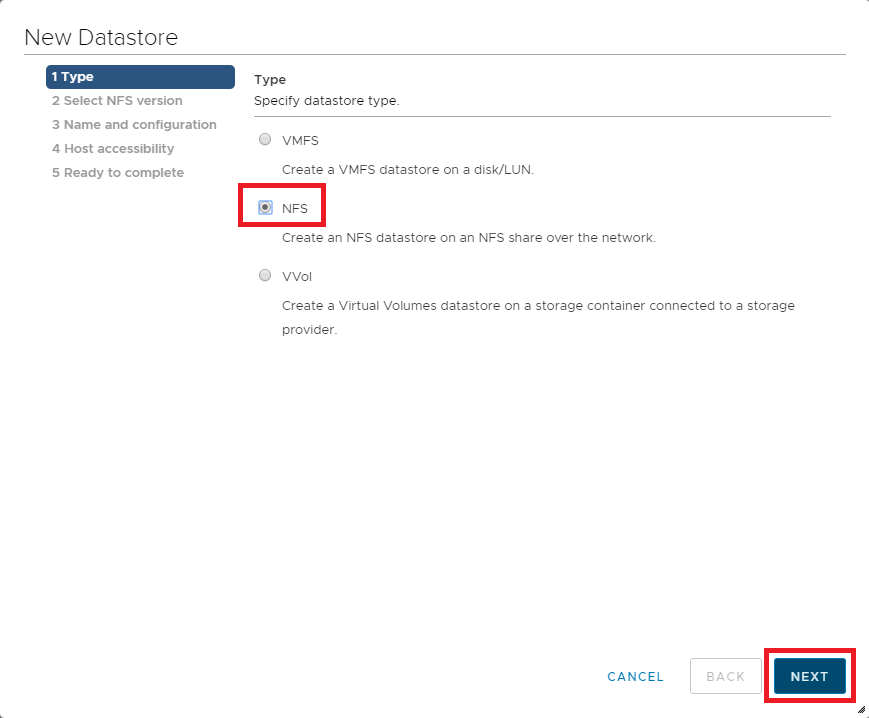

Figure 45 Select NFS and click Next, as shown in Figure 46.

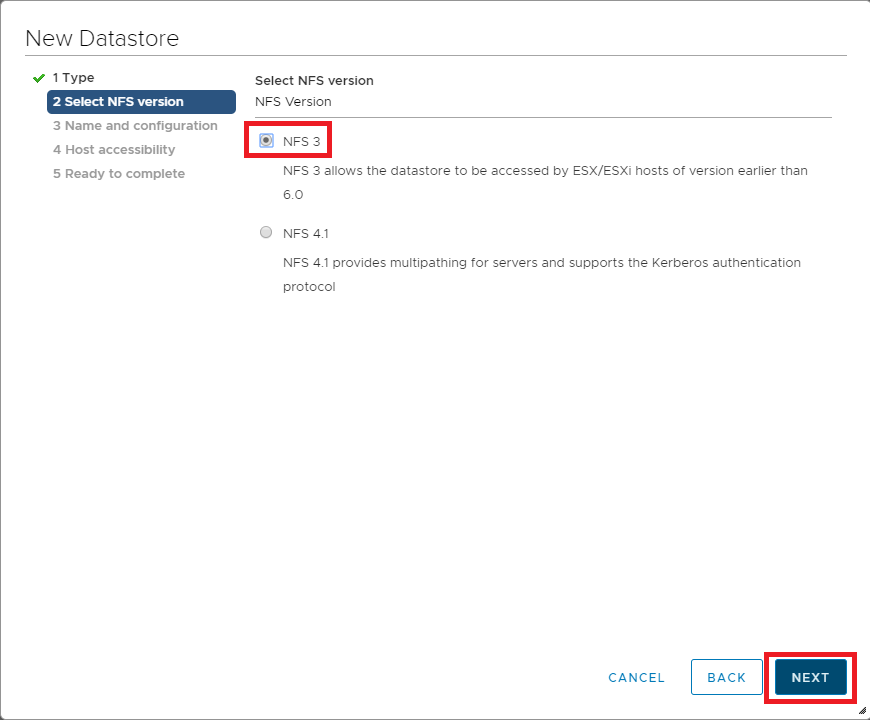

Figure 46 For my lab, I could only get NFS version 3 to work.

Select NFS 3 and click Next, as shown in Figure 47.

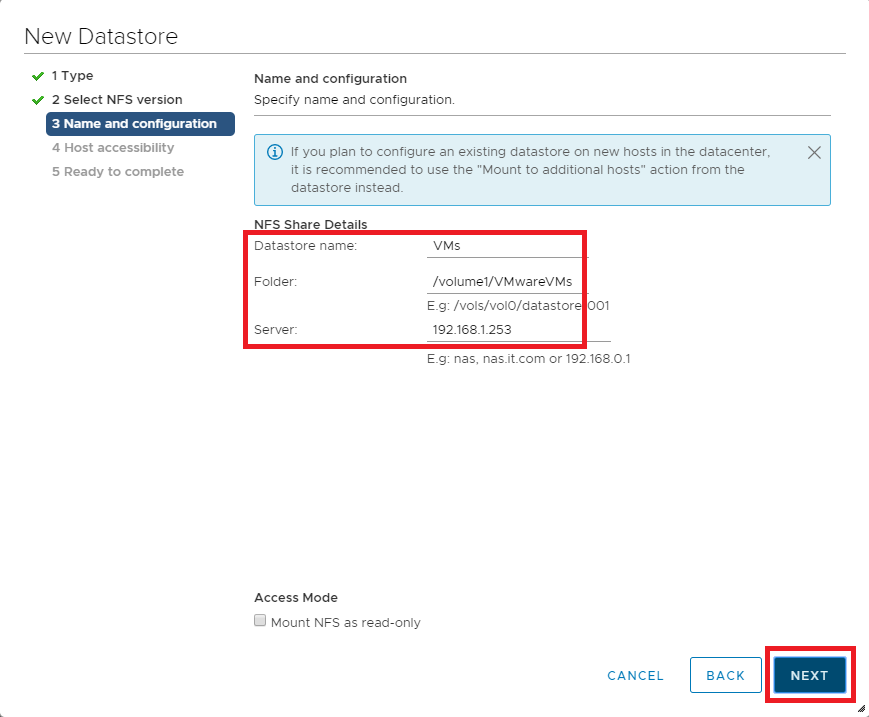

Figure 47

Enter a Datastore name, the Folder on the NFS server, the NFS Server name or IP address, and click Next, as shown in Figure 48.
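The three values the wizard asks for map onto the per-host `esxcli storage nfs add` command, which mounts an NFS 3 export from the ESXi shell. The Python sketch below validates the values and prints that command as a string; it is an alternative illustration, not the method used in this walkthrough. The datastore name, folder, and server address shown are hypothetical, and the `NfsDatastore` class is made up for this example.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class NfsDatastore:
    """The three values entered in the New Datastore wizard."""
    name: str    # Datastore name
    folder: str  # Folder (export path) on the NFS server
    server: str  # NFS server name or IP address

    def validate(self) -> None:
        if not self.name.strip():
            raise ValueError("datastore name must not be empty")
        if not self.folder.startswith("/"):
            raise ValueError("folder must be an absolute export path")
        if not self.server.strip():
            raise ValueError("server must not be empty")

    def esxcli_command(self) -> str:
        """Return the esxcli command that mounts this export as NFS 3."""
        self.validate()
        args = [
            "esxcli", "storage", "nfs", "add",
            f"--host={self.server}",
            f"--share={self.folder}",
            f"--volume-name={self.name}",
        ]
        # Quote each argument so names with spaces survive the shell
        return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    # Hypothetical values, not the ones used in this lab
    print(NfsDatastore("NFS-VMs", "/volume1/vms", "192.168.20.50").esxcli_command())
```

The wizard mounts the datastore on every selected host in one pass, whereas the command would need to be run on each host separately.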

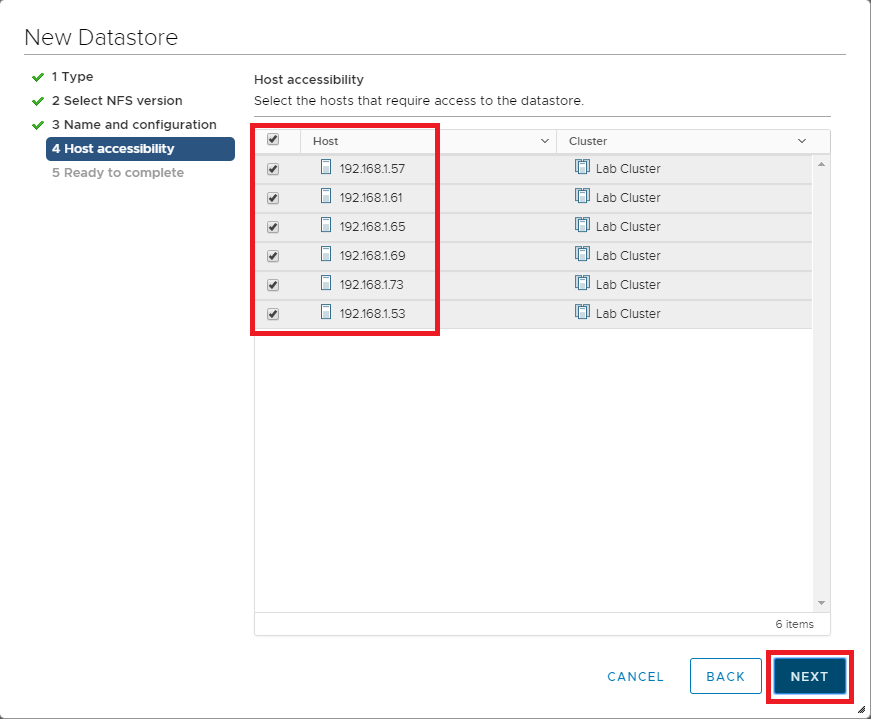

Figure 48 Select all hosts in the cluster and click Next, as shown in Figure 49.

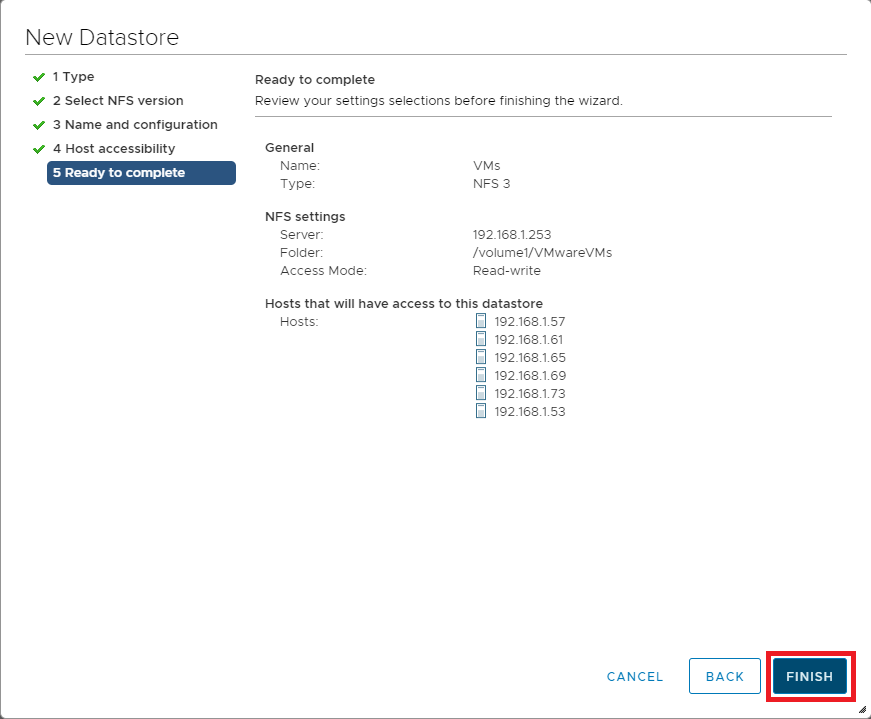

Figure 49 If all the information is correct, click Finish, as shown in Figure 50. If the information is not correct, click Back, correct the information, and then continue.

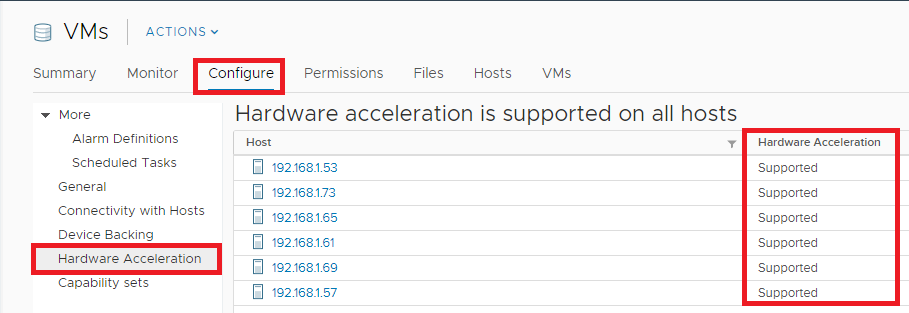

Figure 50 To verify that VAAI is enabled for the datastore, click Configure and click Hardware Acceleration, as shown in Figure 51.

Figure 51 Repeat the steps outlined in Figures 45 through 50 to create an NFS datastore to contain ISOs.

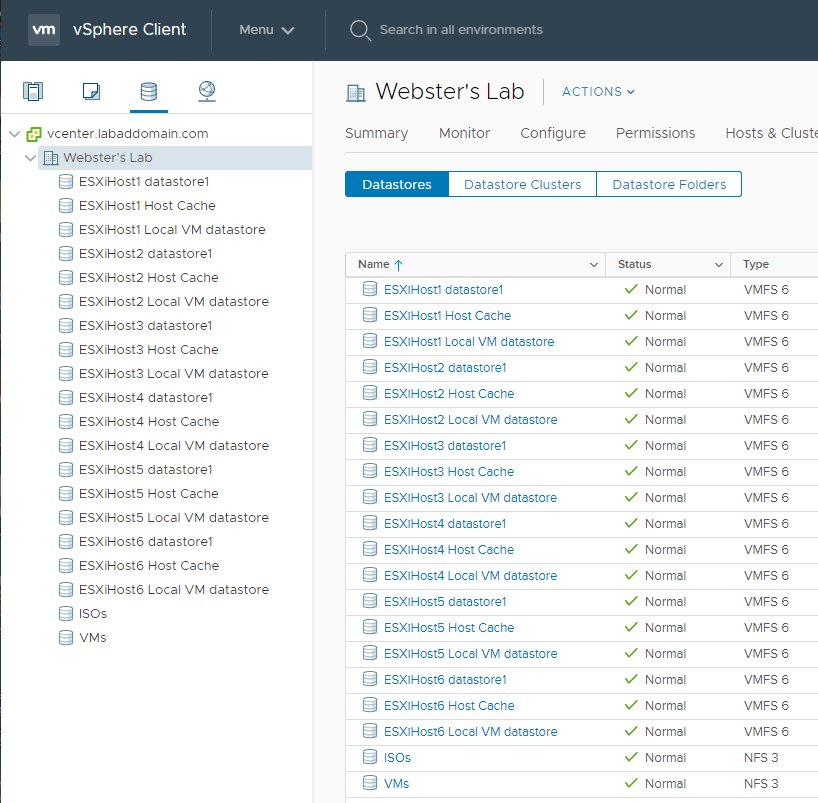

After creating all datastores, they appear in vCenter, as shown in Figure 52.

Figure 52 To verify the NFS ISO datastore, upload an ISO to the datastore.

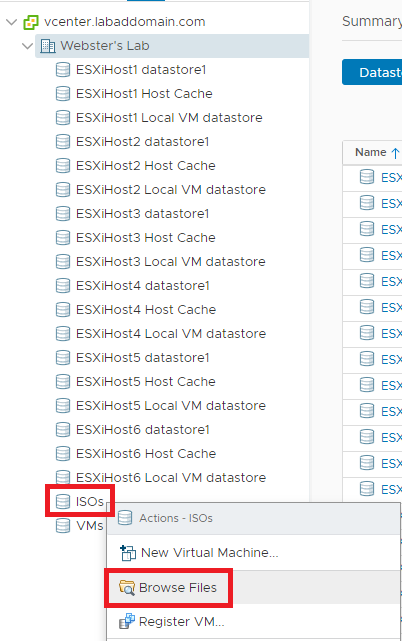

Right-click the ISOs datastore and click Browse Files as shown in Figure 53.

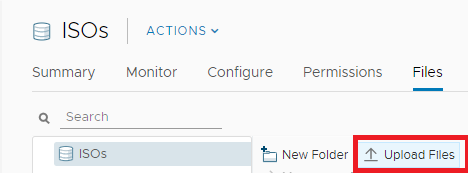

Figure 53 Click Upload Files as shown in Figure 54.

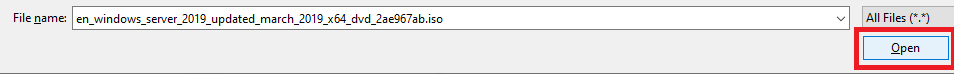

Figure 54 Browse to an ISO file, select it, and click Open as shown in Figure 55. I am uploading a Windows Server 2019 ISO.

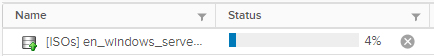

Figure 55 The ISO file starts uploading to the ISOs datastore, as shown in Figure 56.

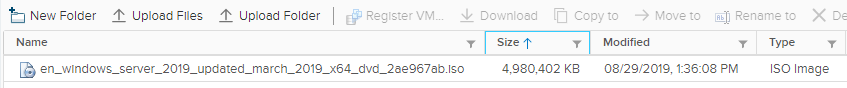

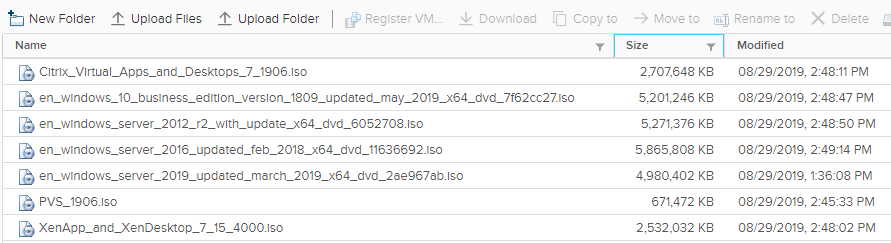

Figure 56 Once the ISO file upload is complete, the ISOs show in the datastore, as shown in Figures 57 and 58.

Figure 57

Figure 58 Next up: Additional vCenter Configuration.

September 17, 2019
